- [Lab] 1. K8S Authentication/Authorization, April 13, 2024

- Author: yeongki0944

1. Deploy EKS and install Grafana, kube-ops-view, and Prometheus

One-click install file (CloudFormation)

eks-oneclick5-us-east-1.yaml (0.02 MB)

Basic setup

    # Switch to the default namespace
    kubectl ns default

    # Check node information: t3.medium
    kubectl get node --label-columns=node.kubernetes.io/instance-type,eks.amazonaws.com/capacityType,topology.kubernetes.io/zone

    # ExternalDNS (set MyDomain to your own domain; the last assignment wins)
    MyDomain=myeks.net
    echo "export MyDomain=myeks.net" >> /etc/profile
    MyDomain=gasida.link
    echo "export MyDomain=gasida.link" >> /etc/profile
    MyDnzHostedZoneId=$(aws route53 list-hosted-zones-by-name --dns-name "${MyDomain}." --query "HostedZones[0].Id" --output text)
    echo $MyDomain, $MyDnzHostedZoneId
    curl -s -O https://raw.githubusercontent.com/gasida/PKOS/main/aews/externaldns.yaml
    MyDomain=$MyDomain MyDnzHostedZoneId=$MyDnzHostedZoneId envsubst < externaldns.yaml | kubectl apply -f -

    # kube-ops-view
    helm repo add geek-cookbook https://geek-cookbook.github.io/charts/
    helm install kube-ops-view geek-cookbook/kube-ops-view --version 1.2.2 --set env.TZ="Asia/Seoul" --namespace kube-system
    kubectl patch svc -n kube-system kube-ops-view -p '{"spec":{"type":"LoadBalancer"}}'
    kubectl annotate service kube-ops-view -n kube-system "external-dns.alpha.kubernetes.io/hostname=kubeopsview.$MyDomain"
    echo -e "Kube Ops View URL = http://kubeopsview.$MyDomain:8080/#scale=1.5"

    # AWS LB Controller
    helm repo add eks https://aws.github.io/eks-charts
    helm repo update
    helm install aws-load-balancer-controller eks/aws-load-balancer-controller -n kube-system --set clusterName=$CLUSTER_NAME \
      --set serviceAccount.create=false --set serviceAccount.name=aws-load-balancer-controller

    # Create the gp3 storage class
    kubectl apply -f https://raw.githubusercontent.com/gasida/PKOS/main/aews/gp3-sc.yaml

    # Check node IPs and set private IP variables
    N1=$(kubectl get node --label-columns=topology.kubernetes.io/zone --selector=topology.kubernetes.io/zone=us-east-1a -o jsonpath='{.items[0].status.addresses[0].address}')
    N2=$(kubectl get node --label-columns=topology.kubernetes.io/zone --selector=topology.kubernetes.io/zone=us-east-1b -o jsonpath='{.items[0].status.addresses[0].address}')
    N3=$(kubectl get node --label-columns=topology.kubernetes.io/zone --selector=topology.kubernetes.io/zone=us-east-1c -o jsonpath='{.items[0].status.addresses[0].address}')
    echo "export N1=$N1" >> /etc/profile
    echo "export N2=$N2" >> /etc/profile
    echo "export N3=$N3" >> /etc/profile
    echo $N1, $N2, $N3

    # Get the node security group ID and allow access from the bastion hosts
    NGSGID=$(aws ec2 describe-security-groups --filters Name=group-name,Values=*ng1* --query "SecurityGroups[*].[GroupId]" --output text)
    aws ec2 authorize-security-group-ingress --group-id $NGSGID --protocol '-1' --cidr 192.168.1.100/32
    aws ec2 authorize-security-group-ingress --group-id $NGSGID --protocol '-1' --cidr 192.168.1.200/32

    # SSH into the worker nodes
    for node in $N1 $N2 $N3; do ssh -o StrictHostKeyChecking=no ec2-user@$node hostname; done
    for node in $N1 $N2 $N3; do ssh ec2-user@$node hostname; done

Install the prometheus-grafana stack

    # Monitoring
    kubectl create ns monitoring
    watch kubectl get pod,pvc,svc,ingress -n monitoring

    # Get the certificate ARN for the region in use; make sure it is valid (an expired certificate causes an error!)
    CERT_ARN=`aws acm list-certificates --query 'CertificateSummaryList[].CertificateArn[]' --output text`
    echo $CERT_ARN

    # Add the repo
    helm repo add prometheus-community https://prometheus-community.github.io/helm-charts

    # Create the values file
    cat <<EOT > monitor-values.yaml
    prometheus:
      prometheusSpec:
        podMonitorSelectorNilUsesHelmValues: false
        serviceMonitorSelectorNilUsesHelmValues: false
        retention: 5d
        retentionSize: "10GiB"
        storageSpec:
          volumeClaimTemplate:
            spec:
              storageClassName: gp3
              accessModes: ["ReadWriteOnce"]
              resources:
                requests:
                  storage: 30Gi

      ingress:
        enabled: true
        ingressClassName: alb
        hosts:
          - prometheus.$MyDomain
        paths:
          - /*
        annotations:
          alb.ingress.kubernetes.io/scheme: internet-facing
          alb.ingress.kubernetes.io/target-type: ip
          alb.ingress.kubernetes.io/listen-ports: '[{"HTTPS":443}, {"HTTP":80}]'
          alb.ingress.kubernetes.io/certificate-arn: $CERT_ARN
          alb.ingress.kubernetes.io/success-codes: 200-399
          alb.ingress.kubernetes.io/load-balancer-name: myeks-ingress-alb
          alb.ingress.kubernetes.io/group.name: study
          alb.ingress.kubernetes.io/ssl-redirect: '443'

    grafana:
      defaultDashboardsTimezone: Asia/Seoul
      adminPassword: prom-operator

      ingress:
        enabled: true
        ingressClassName: alb
        hosts:
          - grafana.$MyDomain
        paths:
          - /*
        annotations:
          alb.ingress.kubernetes.io/scheme: internet-facing
          alb.ingress.kubernetes.io/target-type: ip
          alb.ingress.kubernetes.io/listen-ports: '[{"HTTPS":443}, {"HTTP":80}]'
          alb.ingress.kubernetes.io/certificate-arn: $CERT_ARN
          alb.ingress.kubernetes.io/success-codes: 200-399
          alb.ingress.kubernetes.io/load-balancer-name: myeks-ingress-alb
          alb.ingress.kubernetes.io/group.name: study
          alb.ingress.kubernetes.io/ssl-redirect: '443'

      persistence:
        enabled: true
        type: sts
        storageClassName: "gp3"
        accessModes:
          - ReadWriteOnce
        size: 20Gi

    defaultRules:
      create: false
    kubeControllerManager:
      enabled: false
    kubeEtcd:
      enabled: false
    kubeScheduler:
      enabled: false
    alertmanager:
      enabled: false
    EOT
    cat monitor-values.yaml | yh

    # Deploy
    helm install kube-prometheus-stack prometheus-community/kube-prometheus-stack --version 57.1.0 \
      --set prometheus.prometheusSpec.scrapeInterval='15s' --set prometheus.prometheusSpec.evaluationInterval='15s' \
      -f monitor-values.yaml --namespace monitoring

    # Verify
    ## alertmanager-0 : generates system alerts from predefined policies (e.g. node down, pod Pending) and sends them to alert channels (Slack, etc.)
    ## grafana : Prometheus stores the metric data, and Grafana visualizes it
    ## prometheus-0 : monitored pods expose metrics through a separate sidecar-style 'exporter' pod; Prometheus pulls them and stores them in its internal time-series database
    ## node-exporter : converts resource usage of the physical node (network, storage, and so on) into metrics and exposes them
    ## operator : provides CRDs that make it easy to add alerting policies (prometheus rule), application monitoring targets, and similar
    ## kube-state-metrics : a pod that converts the state of the Kubernetes cluster (kube-state) into metrics
    helm list -n monitoring
    kubectl get pod,svc,ingress,pvc -n monitoring
    kubectl get-all -n monitoring
    kubectl get prometheus,servicemonitors -n monitoring
    kubectl get crd | grep monitoring
    kubectl df-pv

Recommended Grafana dashboards: 15757, 17900, 15172

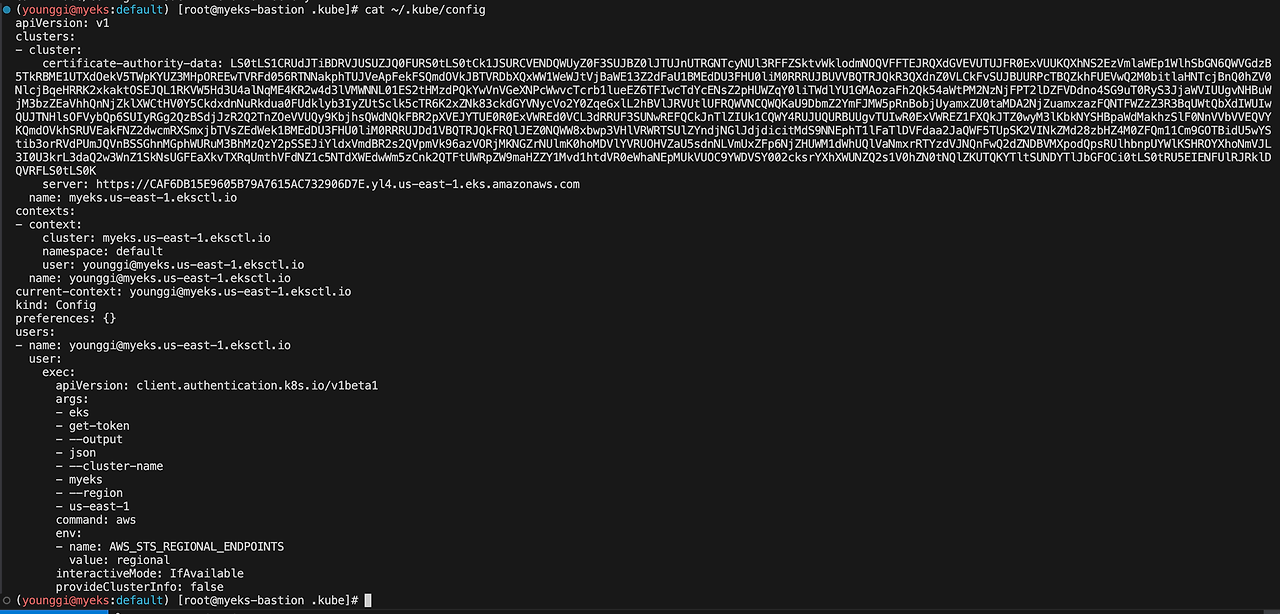

cat ~/.kube/config

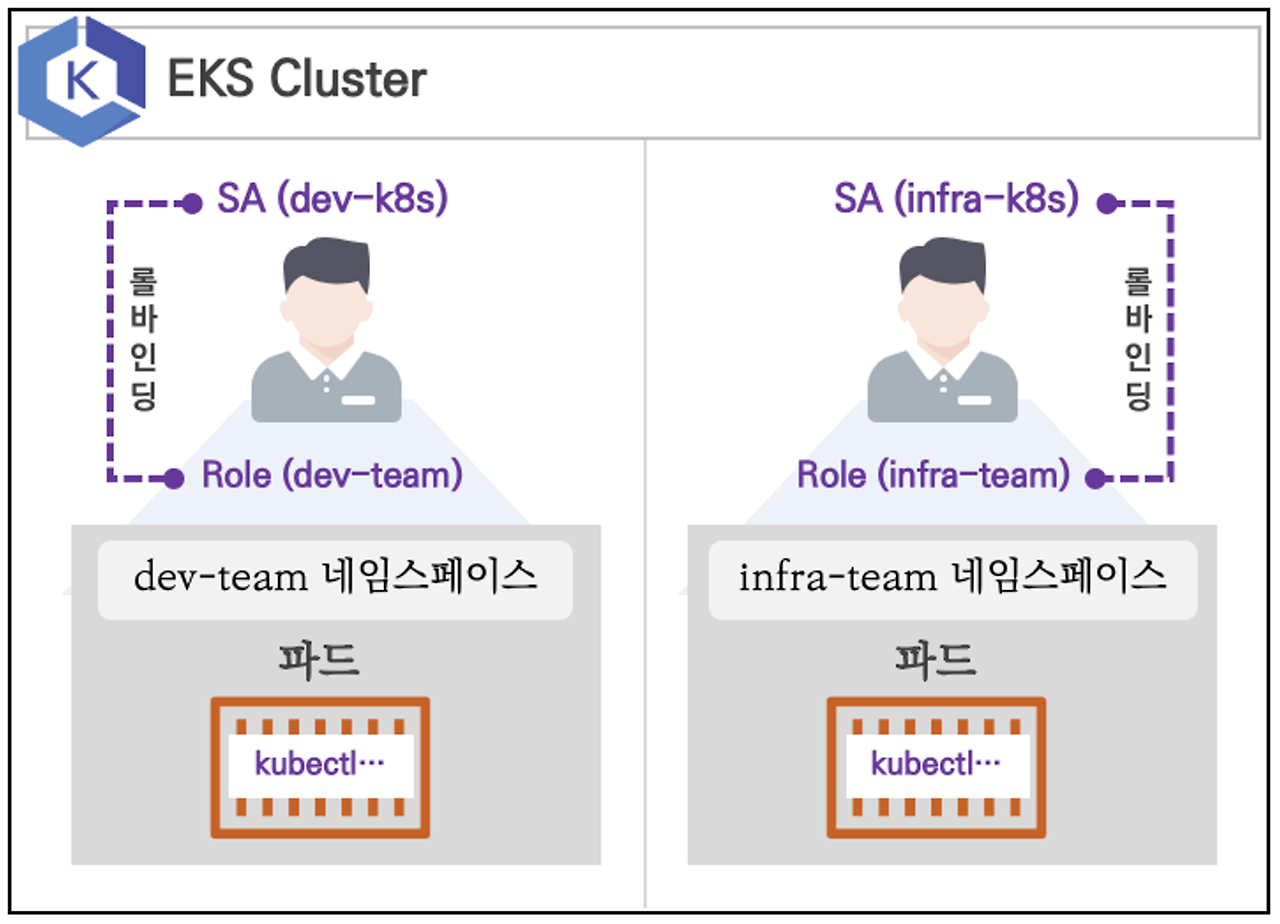

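2. Lab environment

- Create service accounts (Service Account, SA) for the users in Kubernetes: dev-k8s, infra-k8s

- Each user has a different permission (Role, authorization): dev-k8s (all actions within the dev-team namespace), infra-k8s (all actions within the infra-team namespace)

- Create a separate kubectl pod for each user and assign the SA to that pod to test its permissions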

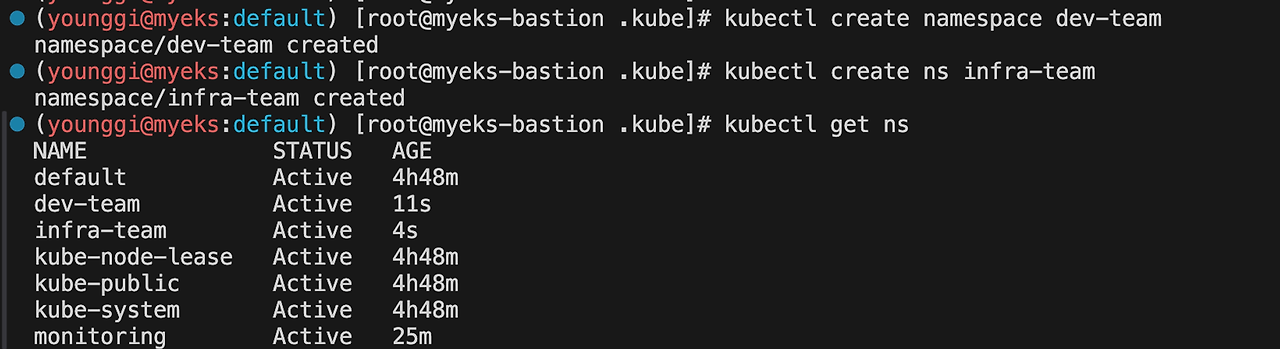

3. Create the namespaces and service accounts

    # Create the namespaces (Namespace, NS)
    kubectl create namespace dev-team
    kubectl create ns infra-team

    # Check the namespaces
    kubectl get ns

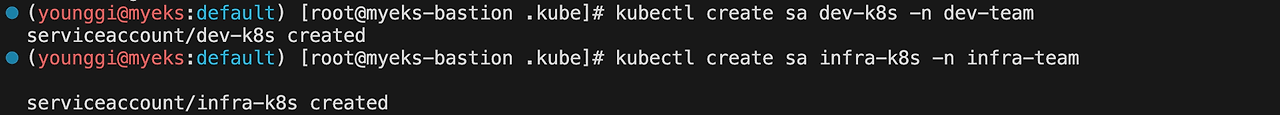

    # Create a service account in each namespace (serviceaccounts can be abbreviated as sa)
    kubectl create sa dev-k8s -n dev-team
    kubectl create sa infra-k8s -n infra-team

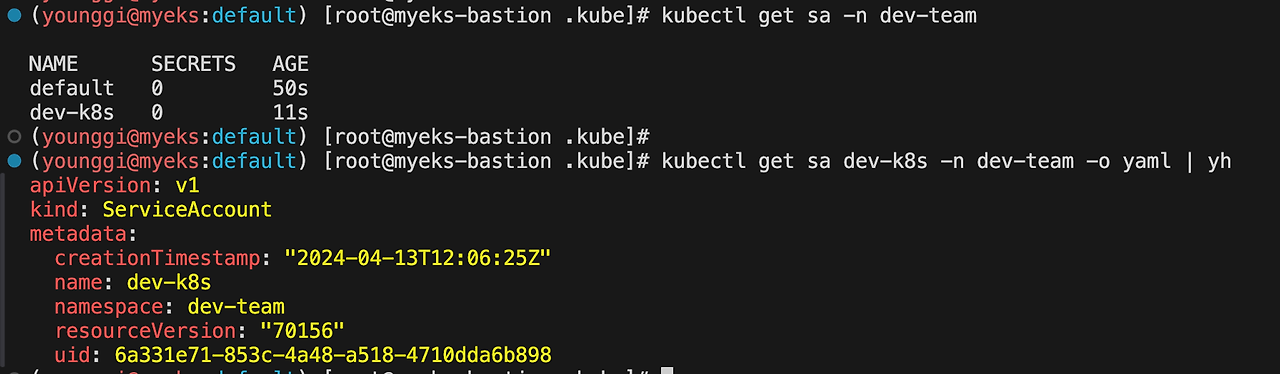

    # Check the service account information
    kubectl get sa -n dev-team
    kubectl get sa dev-k8s -n dev-team -o yaml | yh

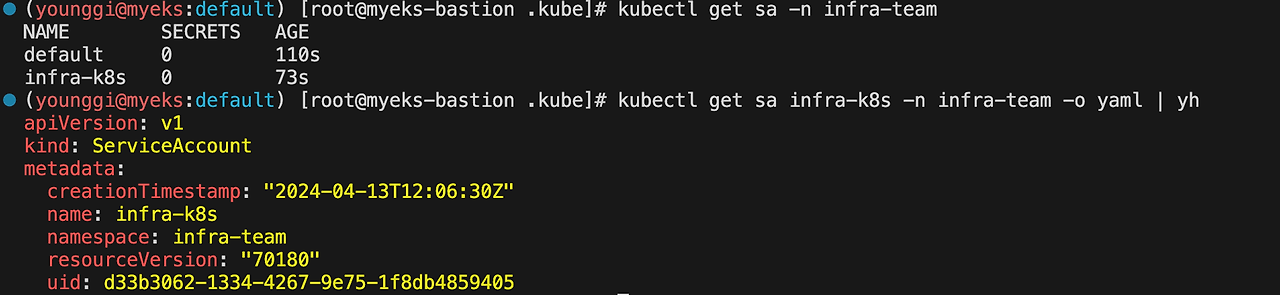

    kubectl get sa -n infra-team
    kubectl get sa infra-k8s -n infra-team -o yaml | yh

4. Create pods that use the service accounts, then test permissions

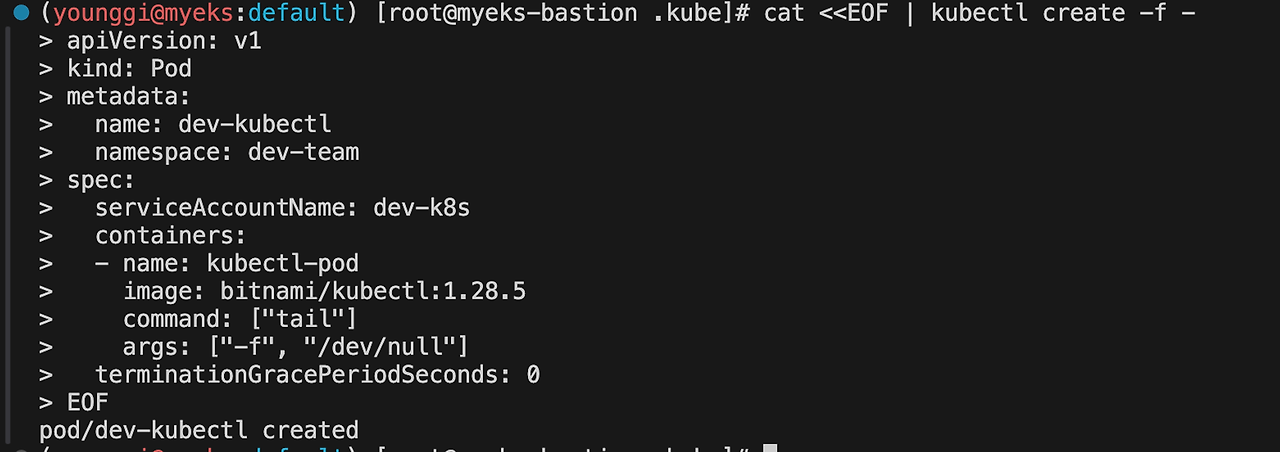

    # Create a kubectl pod in each namespace (container image: bitnami/kubectl)
    # docker run --rm --name kubectl -v /path/to/your/kube/config:/.kube/config bitnami/kubectl:latest
    cat <<EOF | kubectl create -f -
    apiVersion: v1
    kind: Pod
    metadata:
      name: dev-kubectl
      namespace: dev-team
    spec:
      serviceAccountName: dev-k8s
      containers:
      - name: kubectl-pod
        image: bitnami/kubectl:1.28.5
        command: ["tail"]
        args: ["-f", "/dev/null"]
      terminationGracePeriodSeconds: 0
    EOF

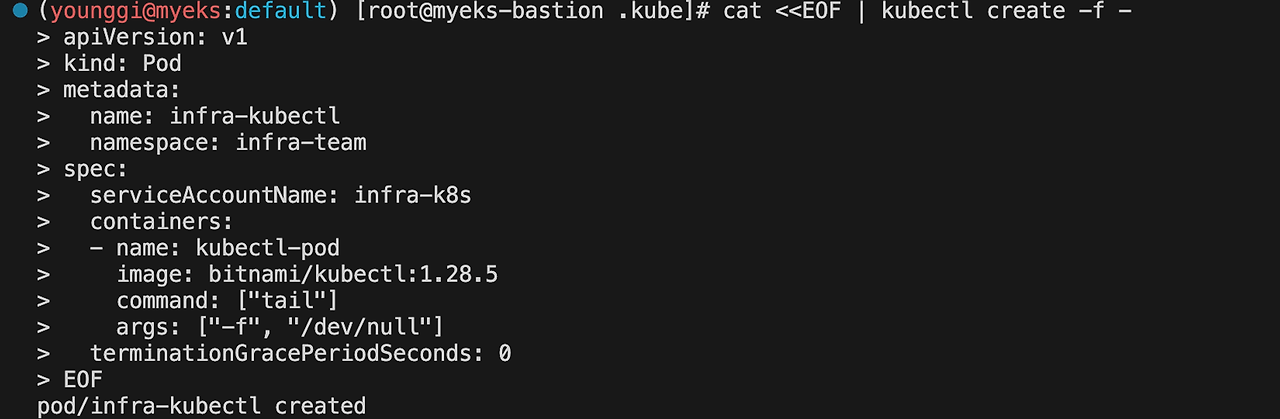

    cat <<EOF | kubectl create -f -
    apiVersion: v1
    kind: Pod
    metadata:
      name: infra-kubectl
      namespace: infra-team
    spec:
      serviceAccountName: infra-k8s
      containers:
      - name: kubectl-pod
        image: bitnami/kubectl:1.28.5
        command: ["tail"]
        args: ["-f", "/dev/null"]
      terminationGracePeriodSeconds: 0
    EOF

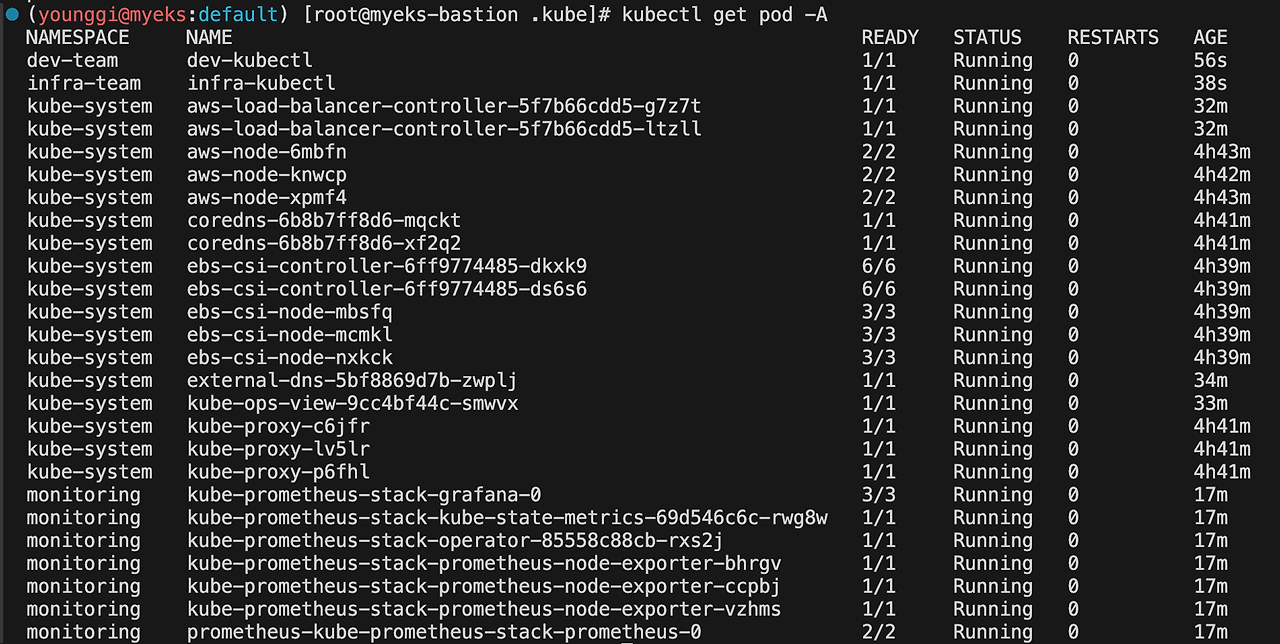

kubectl get pod -A

Pod details for dev-kubectl. The recorded command passes `-o` twice, so the later `-o yaml` takes effect and no pod name is actually given, which is why the output is a `List` of the pods in the namespace. `kubectl get pod dev-kubectl -n dev-team -o yaml` returns the single pod instead.

    (younggi@myeks:default) [root@myeks-bastion .kube]# kubectl get pod -o dev-kubectl -n dev-team -o yaml
    apiVersion: v1
    items:
    - apiVersion: v1
      kind: Pod
      metadata:
        creationTimestamp: "2024-04-13T12:09:38Z"
        name: dev-kubectl
        namespace: dev-team
        resourceVersion: "71133"
        uid: 4644861c-9556-4d3c-8add-100a1137e791
      spec:
        containers:
        - args:
          - -f
          - /dev/null
          command:
          - tail
          image: bitnami/kubectl:1.28.5
          imagePullPolicy: IfNotPresent
          name: kubectl-pod
          resources: {}
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File
          volumeMounts:
          - mountPath: /var/run/secrets/kubernetes.io/serviceaccount
            name: kube-api-access-6fw7w
            readOnly: true
        dnsPolicy: ClusterFirst
        enableServiceLinks: true
        nodeName: ip-192-168-1-170.ec2.internal
        preemptionPolicy: PreemptLowerPriority
        priority: 0
        restartPolicy: Always
        schedulerName: default-scheduler
        securityContext: {}
        serviceAccount: dev-k8s
        serviceAccountName: dev-k8s
        terminationGracePeriodSeconds: 0
        tolerations:
        - effect: NoExecute
          key: node.kubernetes.io/not-ready
          operator: Exists
          tolerationSeconds: 300
        - effect: NoExecute
          key: node.kubernetes.io/unreachable
          operator: Exists
          tolerationSeconds: 300
        volumes:
        - name: kube-api-access-6fw7w
          projected:
            defaultMode: 420
            sources:
            - serviceAccountToken:
                expirationSeconds: 3607
                path: token
            - configMap:
                items:
                - key: ca.crt
                  path: ca.crt
                name: kube-root-ca.crt
            - downwardAPI:
                items:
                - fieldRef:
                    apiVersion: v1
                    fieldPath: metadata.namespace
                  path: namespace
      status:
        conditions:
        - lastProbeTime: null
          lastTransitionTime: "2024-04-13T12:09:38Z"
          status: "True"
          type: Initialized
        - lastProbeTime: null
          lastTransitionTime: "2024-04-13T12:09:43Z"
          status: "True"
          type: Ready
        - lastProbeTime: null
          lastTransitionTime: "2024-04-13T12:09:43Z"
          status: "True"
          type: ContainersReady
        - lastProbeTime: null
          lastTransitionTime: "2024-04-13T12:09:38Z"
          status: "True"
          type: PodScheduled
        containerStatuses:
        - containerID: containerd://0d17840ce53ae1316a11ab18284e1c978078da4c2eb38927913585e928ccb6d5
          image: docker.io/bitnami/kubectl:1.28.5
          imageID: docker.io/bitnami/kubectl@sha256:cfd03da61658004f1615e5401ba8bde3cc4ba3f87afff0ed8875c5d1b0b09e4a
          lastState: {}
          name: kubectl-pod
          ready: true
          restartCount: 0
          started: true
          state:
            running:
              startedAt: "2024-04-13T12:09:43Z"
        hostIP: 192.168.1.170
        phase: Running
        podIP: 192.168.1.86
        podIPs:
        - ip: 192.168.1.86
        qosClass: BestEffort
        startTime: "2024-04-13T12:09:38Z"
    kind: List
    metadata:
      resourceVersion: ""

Pod details for infra-kubectl (same double `-o` in the recorded command):

    (younggi@myeks:default) [root@myeks-bastion .kube]# kubectl get pod -o infra-kubectl -n infra-team -o yaml
    apiVersion: v1
    items:
    - apiVersion: v1
      kind: Pod
      metadata:
        creationTimestamp: "2024-04-13T12:09:56Z"
        name: infra-kubectl
        namespace: infra-team
        resourceVersion: "71237"
        uid: f7165574-ae58-4b67-8427-237e6f4bd9bf
      spec:
        containers:
        - args:
          - -f
          - /dev/null
          command:
          - tail
          image: bitnami/kubectl:1.28.5
          imagePullPolicy: IfNotPresent
          name: kubectl-pod
          resources: {}
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File
          volumeMounts:
          - mountPath: /var/run/secrets/kubernetes.io/serviceaccount
            name: kube-api-access-2nz87
            readOnly: true
        dnsPolicy: ClusterFirst
        enableServiceLinks: true
        nodeName: ip-192-168-3-39.ec2.internal
        preemptionPolicy: PreemptLowerPriority
        priority: 0
        restartPolicy: Always
        schedulerName: default-scheduler
        securityContext: {}
        serviceAccount: infra-k8s
        serviceAccountName: infra-k8s
        terminationGracePeriodSeconds: 0
        tolerations:
        - effect: NoExecute
          key: node.kubernetes.io/not-ready
          operator: Exists
          tolerationSeconds: 300
        - effect: NoExecute
          key: node.kubernetes.io/unreachable
          operator: Exists
          tolerationSeconds: 300
        volumes:
        - name: kube-api-access-2nz87
          projected:
            defaultMode: 420
            sources:
            - serviceAccountToken:
                expirationSeconds: 3607
                path: token
            - configMap:
                items:
                - key: ca.crt
                  path: ca.crt
                name: kube-root-ca.crt
            - downwardAPI:
                items:
                - fieldRef:
                    apiVersion: v1
                    fieldPath: metadata.namespace
                  path: namespace
      status:
        conditions:
        - lastProbeTime: null
          lastTransitionTime: "2024-04-13T12:09:56Z"
          status: "True"
          type: Initialized
        - lastProbeTime: null
          lastTransitionTime: "2024-04-13T12:10:03Z"
          status: "True"
          type: Ready
        - lastProbeTime: null
          lastTransitionTime: "2024-04-13T12:10:03Z"
          status: "True"
          type: ContainersReady
        - lastProbeTime: null
          lastTransitionTime: "2024-04-13T12:09:56Z"
          status: "True"
          type: PodScheduled
        containerStatuses:
        - containerID: containerd://f38dc0e26e3aada63b6f10b10f0abf9d076a0240a8efd2f73f8af63217fcc2bd
          image: docker.io/bitnami/kubectl:1.28.5
          imageID: docker.io/bitnami/kubectl@sha256:cfd03da61658004f1615e5401ba8bde3cc4ba3f87afff0ed8875c5d1b0b09e4a
          lastState: {}
          name: kubectl-pod
          ready: true
          restartCount: 0
          started: true
          state:
            running:
              startedAt: "2024-04-13T12:10:02Z"
        hostIP: 192.168.3.39
        phase: Running
        podIP: 192.168.3.131
        podIPs:
        - ip: 192.168.3.131
        qosClass: BestEffort
        startTime: "2024-04-13T12:09:56Z"
    kind: List
    metadata:
      resourceVersion: ""

5. Check the service account (token) information mounted into the pod by default

kubectl exec -it dev-kubectl -n dev-team -- ls /run/secrets/kubernetes.io/serviceaccount

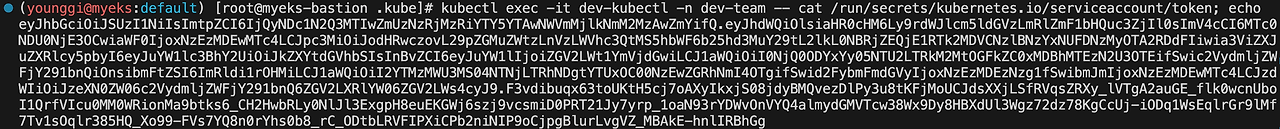

kubectl exec -it dev-kubectl -n dev-team -- cat /run/secrets/kubernetes.io/serviceaccount/token

kubectl exec -it dev-kubectl -n dev-team -- cat /run/secrets/kubernetes.io/serviceaccount/namespace

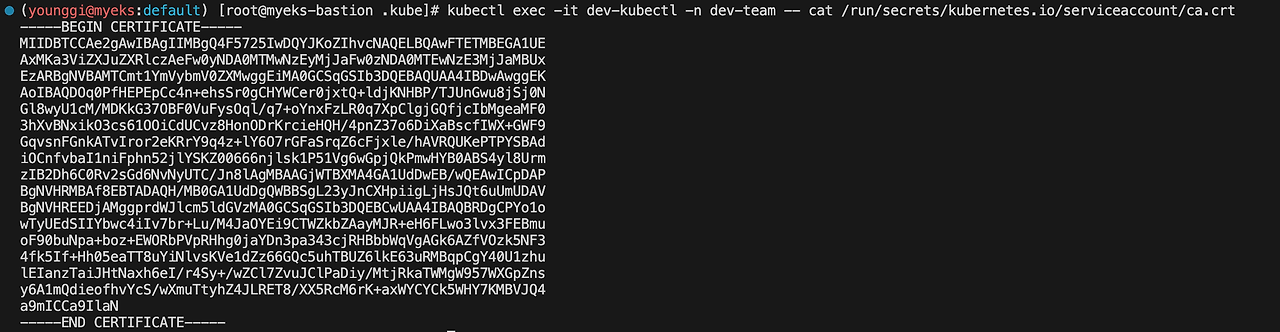

kubectl exec -it dev-kubectl -n dev-team -- cat /run/secrets/kubernetes.io/serviceaccount/ca.crt

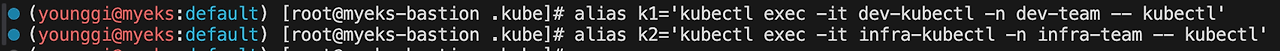
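The token file printed above is a JSON Web Token (JWT): three base64url-encoded segments separated by dots, whose middle segment carries the claims identifying the service account. As a minimal sketch, assuming GNU `base64` is available on the bastion, the payload can be decoded as follows. The helper names `jwt_payload` and `b64url` and the sample token are hypothetical; the sample is built locally so the snippet runs without a cluster.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the decoded payload (second segment) of a JWT.
jwt_payload() {
  local seg
  # base64url -> base64: swap the URL-safe characters back.
  seg=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # Restore the '=' padding that JWT encoding strips.
  case $(( ${#seg} % 4 )) in
    2) seg="${seg}==" ;;
    3) seg="${seg}=" ;;
  esac
  printf '%s' "$seg" | base64 -d
}

# Hypothetical helper: base64url-encode stdin without padding.
b64url() { base64 | tr -d '=\n' | tr '/+' '_-'; }

# Sample token built locally (NOT a real cluster token; the signature is a dummy).
SAMPLE_CLAIMS='{"sub":"system:serviceaccount:dev-team:dev-k8s"}'
SAMPLE_TOKEN="$(printf '%s' '{"alg":"none"}' | b64url).$(printf '%s' "$SAMPLE_CLAIMS" | b64url).sig"

jwt_payload "$SAMPLE_TOKEN"; echo
```

Against the lab pod, the same helper could be fed the real token, for example `jwt_payload "$(kubectl exec dev-kubectl -n dev-team -- cat /run/secrets/kubernetes.io/serviceaccount/token)"` (without `-it`, so that no terminal control characters end up in the captured output).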

6. Open a shell into each pod and check information, using shortcut commands (aliases)

    alias k1='kubectl exec -it dev-kubectl -n dev-team -- kubectl'
    alias k2='kubectl exec -it infra-kubectl -n infra-team -- kubectl'

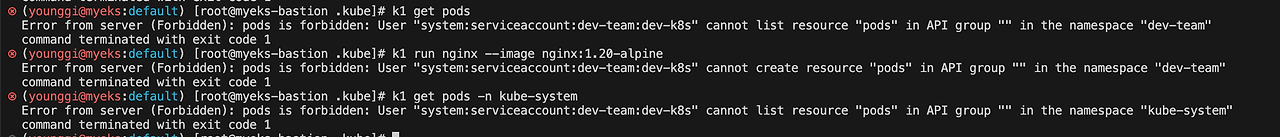

    k1 get pods
    k1 run nginx --image nginx:1.20-alpine
    k1 get pods -n kube-system

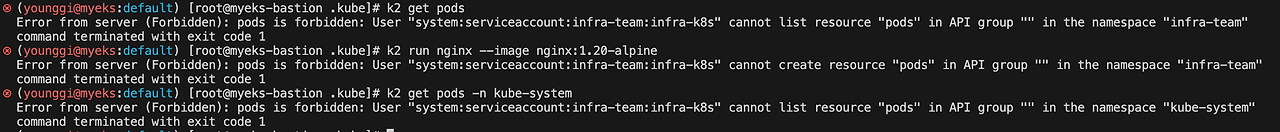

    k2 get pods
    k2 run nginx --image nginx:1.20-alpine
    k2 get pods -n kube-system

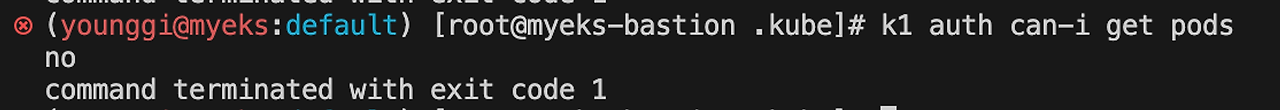

# (Optional) Use kubectl auth can-i to check whether the user running kubectl has a specific permission

k1 auth can-i get pods

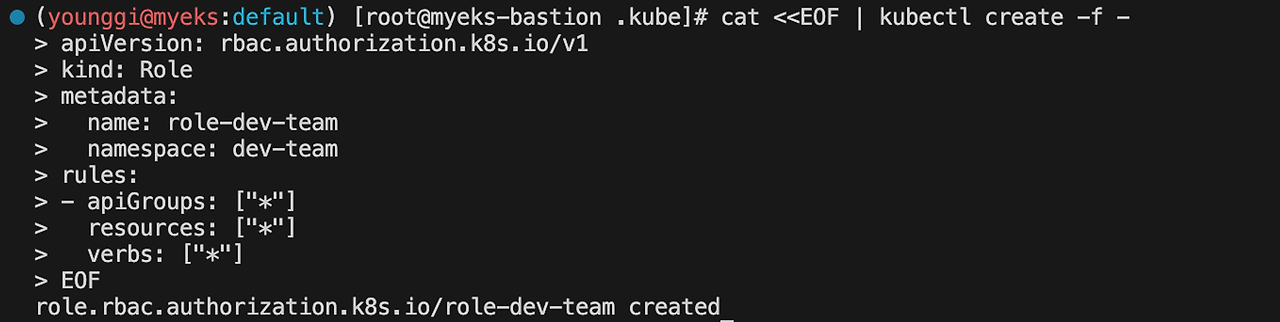

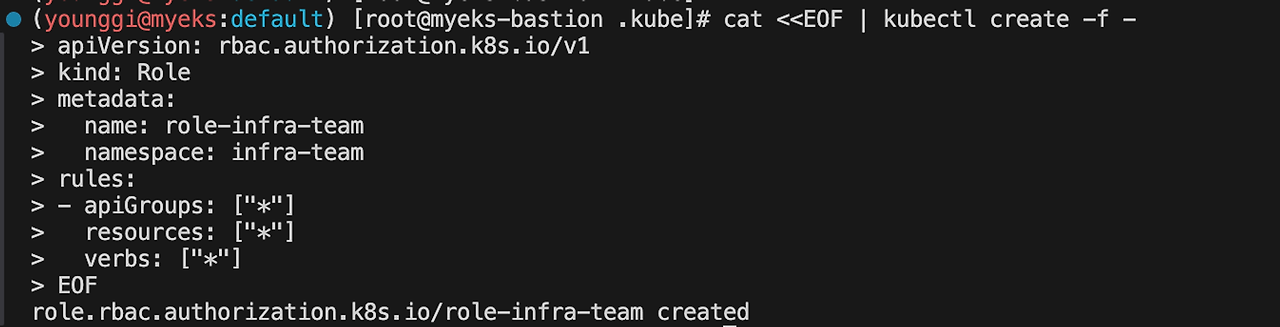
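The same check can also be run from the bastion, without entering the pod, by impersonating the service account with `--as`. A service account's API username has the form `system:serviceaccount:<namespace>:<name>`. The sketch below assumes the caller is allowed to impersonate users (a cluster admin normally is); `sa_user` is a hypothetical helper, and the cluster call is skipped when kubectl is not installed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the API username of a service account.
# $1 = namespace, $2 = service account name
sa_user() {
  printf 'system:serviceaccount:%s:%s' "$1" "$2"
}

DEV_USER=$(sa_user dev-team dev-k8s)
echo "$DEV_USER"

# Only query the cluster when kubectl is available.
if command -v kubectl >/dev/null 2>&1; then
  # Prints "yes" or "no"; a "no" answer exits non-zero, hence "|| true".
  kubectl auth can-i get pods -n dev-team --as="$DEV_USER" --request-timeout=5s || true
fi
```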

7. Create a role that grants all permissions within each namespace

    cat <<EOF | kubectl create -f -
    apiVersion: rbac.authorization.k8s.io/v1
    kind: Role
    metadata:
      name: role-dev-team
      namespace: dev-team
    rules:
    - apiGroups: ["*"]
      resources: ["*"]
      verbs: ["*"]
    EOF

    cat <<EOF | kubectl create -f -
    apiVersion: rbac.authorization.k8s.io/v1
    kind: Role
    metadata:
      name: role-infra-team
      namespace: infra-team
    rules:
    - apiGroups: ["*"]
      resources: ["*"]
      verbs: ["*"]
    EOF

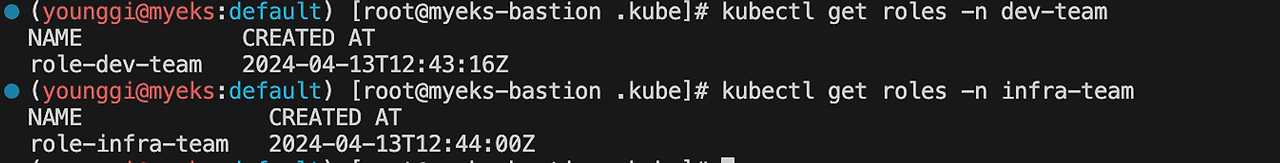
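The roles above use `"*"` for API groups, resources, and verbs, which grants everything inside the namespace. For comparison, here is a sketch of a narrower, read-only role for pods. The role name `pod-reader` and the helper `render_role` are hypothetical and not part of the lab; the manifest is only printed, so nothing is created unless the output is piped to `kubectl apply -f -`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a read-only Role manifest for pods.
# $1 = namespace, $2 = role name
render_role() {
  cat <<EOF
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: $2
  namespace: $1
rules:
- apiGroups: [""]            # "" is the core API group, where pods live
  resources: ["pods"]
  verbs: ["get", "list", "watch"]
EOF
}

ROLE_YAML=$(render_role dev-team pod-reader)
echo "$ROLE_YAML"
```

Listing verbs explicitly keeps write operations such as `create` and `delete` out of the role, which is the usual starting point when the wildcard role is broader than a team needs.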

Check the roles

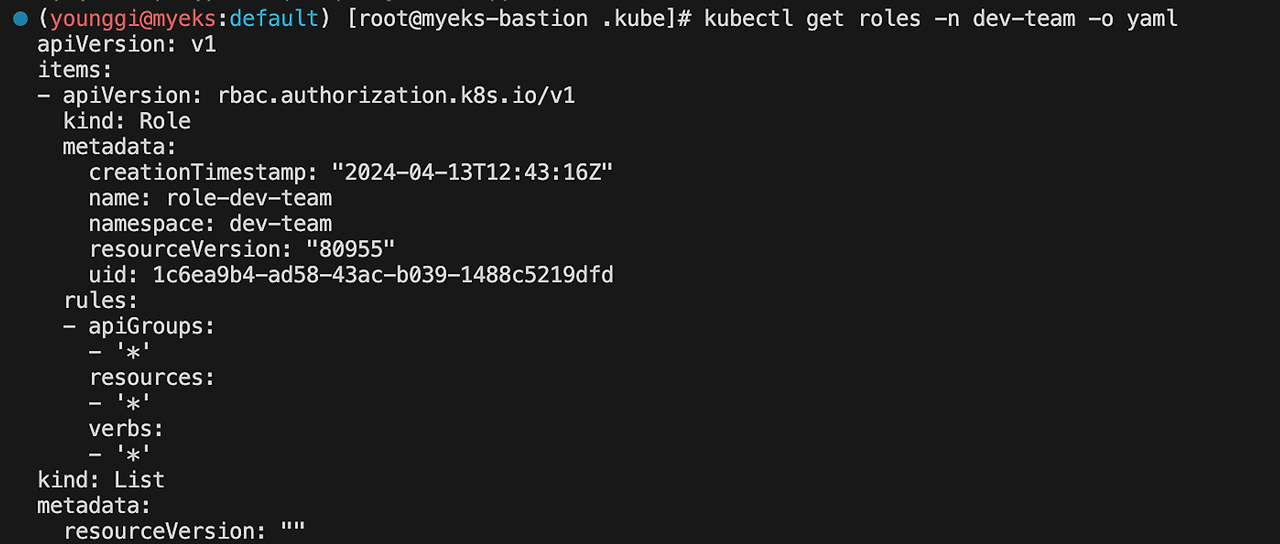

    kubectl get roles -n dev-team
    kubectl get roles -n infra-team

kubectl get roles -n dev-team -o yaml

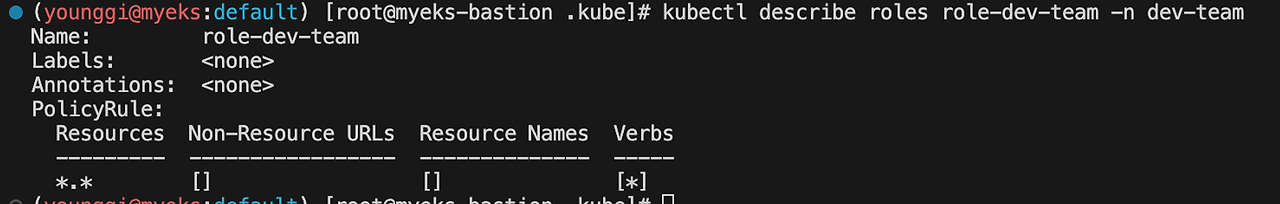

kubectl describe roles role-dev-team -n dev-team

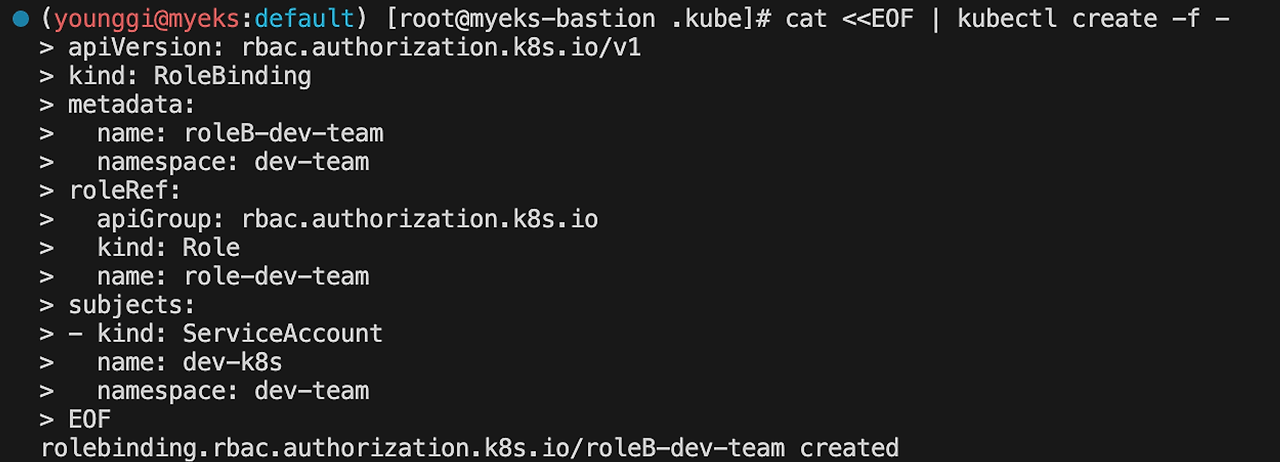

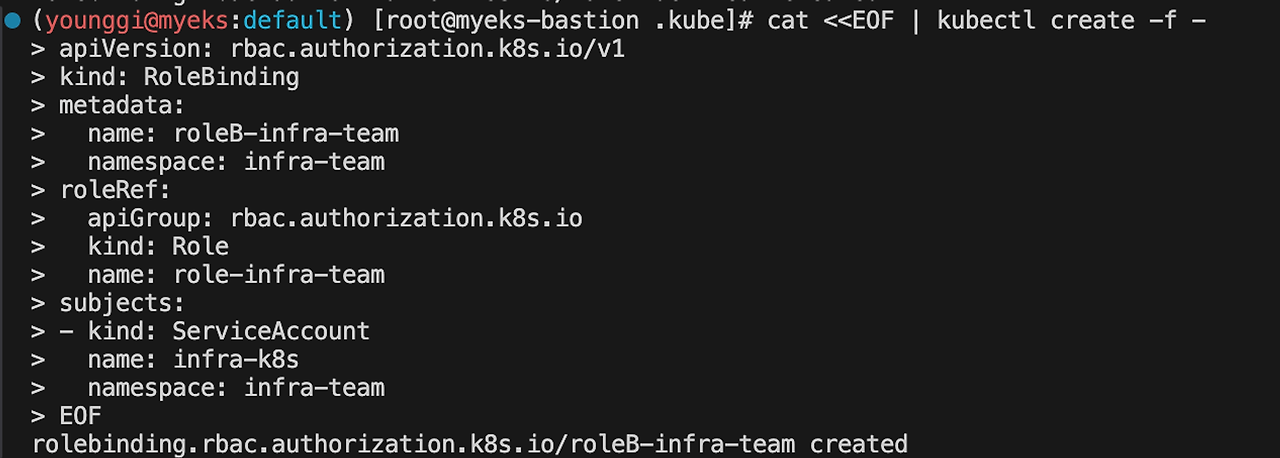

8. Create the role bindings, which link each service account to its role

    cat <<EOF | kubectl create -f -
    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      name: roleB-dev-team
      namespace: dev-team
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: Role
      name: role-dev-team
    subjects:
    - kind: ServiceAccount
      name: dev-k8s
      namespace: dev-team
    EOF

    cat <<EOF | kubectl create -f -
    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      name: roleB-infra-team
      namespace: infra-team
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: Role
      name: role-infra-team
    subjects:
    - kind: ServiceAccount
      name: infra-k8s
      namespace: infra-team
    EOF

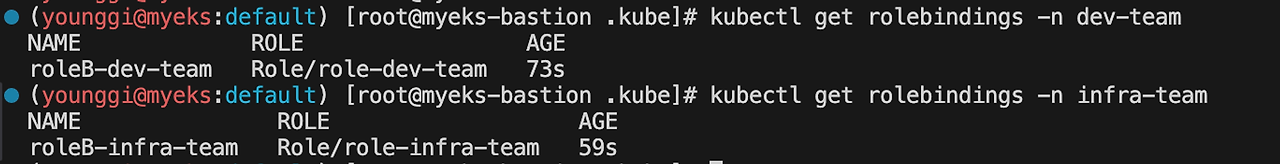

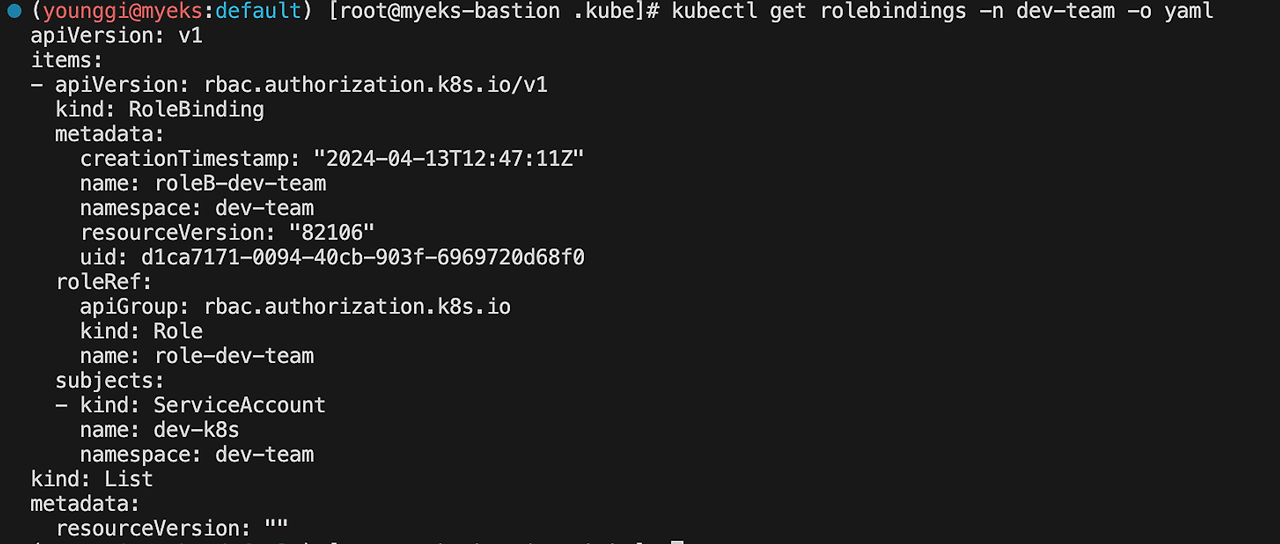
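A RoleBinding grants permissions only within its own namespace, but its subjects may come from other namespaces. As a sketch that goes beyond the original lab, the manifest below would let `infra-k8s` from `infra-team` use `role-dev-team` inside `dev-team`. The binding name `roleB-infra-in-dev` and the helper `render_binding` are hypothetical; the manifest is only printed, not applied.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a RoleBinding manifest.
# $1 = binding name, $2 = namespace of the binding and role, $3 = role name,
# $4 = service account name, $5 = namespace of the service account
render_binding() {
  cat <<EOF
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: $1
  namespace: $2
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: $3
subjects:
- kind: ServiceAccount
  name: $4
  namespace: $5              # may differ from the binding's namespace
EOF
}

BINDING_YAML=$(render_binding roleB-infra-in-dev dev-team role-dev-team infra-k8s infra-team)
echo "$BINDING_YAML"
```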

Check the role bindings

    kubectl get rolebindings -n dev-team
    kubectl get rolebindings -n infra-team

kubectl get rolebindings -n dev-team -o yaml

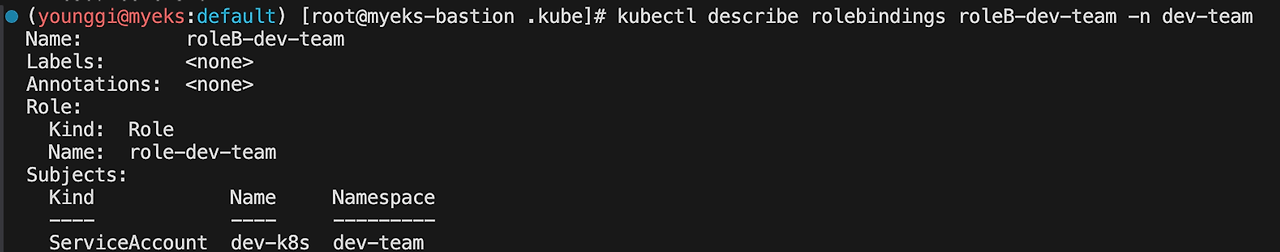

kubectl describe rolebindings roleB-dev-team -n dev-team

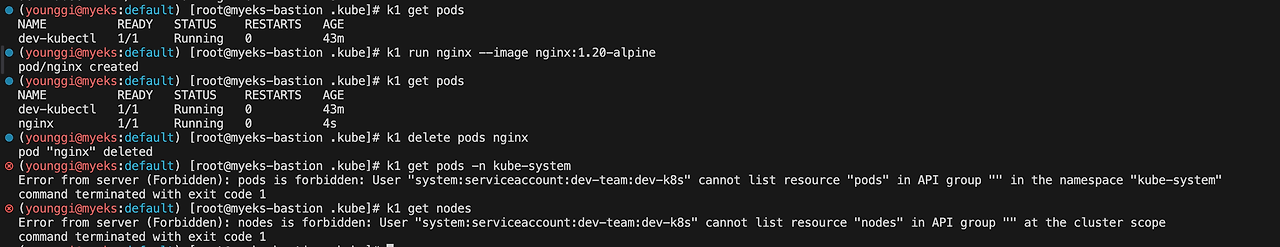

9. Test permissions again from the pods that use the service accounts

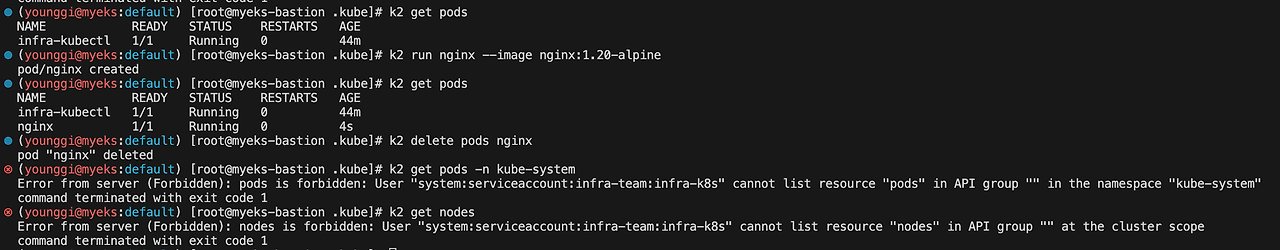

    alias k1='kubectl exec -it dev-kubectl -n dev-team -- kubectl'
    alias k2='kubectl exec -it infra-kubectl -n infra-team -- kubectl'

    k1 get pods
    k1 run nginx --image nginx:1.20-alpine
    k1 get pods
    k1 delete pods nginx
    k1 get pods -n kube-system
    k1 get nodes

    k2 get pods
    k2 run nginx --image nginx:1.20-alpine
    k2 get pods
    k2 delete pods nginx
    k2 get pods -n kube-system
    k2 get nodes

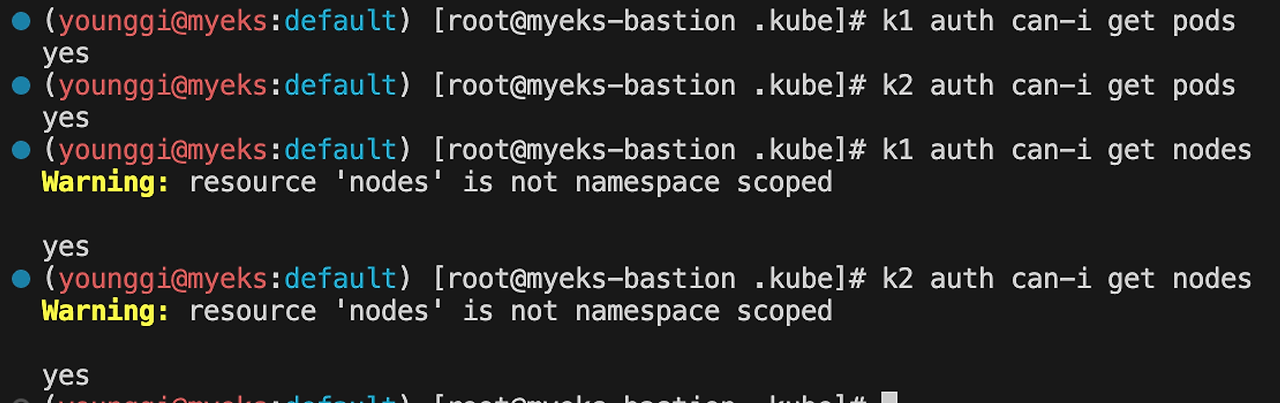

    k1 auth can-i get pods
    k2 auth can-i get pods
    k1 auth can-i get nodes
    k2 auth can-i get nodes
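Aliases such as `k1` and `k2` are convenient at an interactive prompt, but bash does not expand aliases in non-interactive scripts by default. A minimal sketch of the same checks as a script, using shell functions instead: the `-it` flags are dropped because a script has no terminal, the cluster calls are skipped when kubectl is not installed, and the `CHECKS` list is an illustrative assumption rather than part of the lab.

```shell
#!/usr/bin/env bash
# Function equivalents of the k1/k2 aliases (usable inside scripts).
k1() { kubectl exec dev-kubectl   -n dev-team   -- kubectl "$@"; }
k2() { kubectl exec infra-kubectl -n infra-team -- kubectl "$@"; }

# Verb/resource pairs to test from each pod (illustrative list).
CHECKS=("get pods" "create pods" "get nodes")

# Only query the cluster when kubectl is available.
if command -v kubectl >/dev/null 2>&1; then
  for who in k1 k2; do
    for check in "${CHECKS[@]}"; do
      # $check is intentionally unquoted so it splits into verb and resource.
      answer=$("$who" auth can-i $check 2>/dev/null || true)
      printf '%s: can-i %s -> %s\n' "$who" "$check" "${answer:-error}"
    done
  done
fi
```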

10. Delete the resources

kubectl delete ns dev-team infra-team
