- Karpenter Lab (April 7, 2024)

- Author: yeongki0944

- Posted 2024.04.07

1. EKS (karpenter-preconfig.yaml)

Attachment: karpenter-preconfig-us-east-1.yaml (0.01 MB)

2. Pre-checks & installing eks-node-viewer

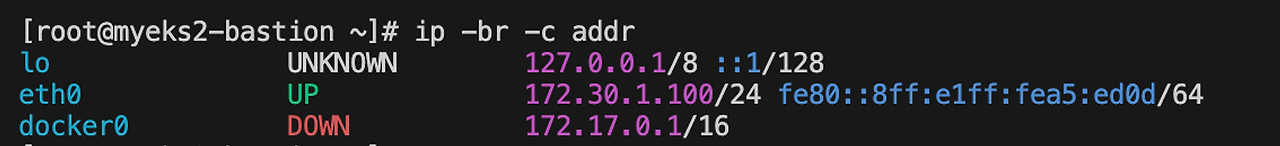

ip -br -c addr

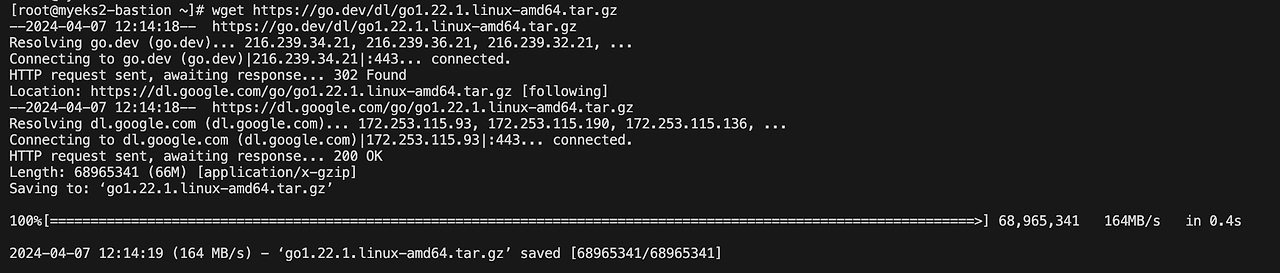

    # Install EKS Node Viewer: on the current EC2 spec this takes a while (2+ minutes)
    wget https://go.dev/dl/go1.22.1.linux-amd64.tar.gz
    tar -C /usr/local -xzf go1.22.1.linux-amd64.tar.gz
    export PATH=$PATH:/usr/local/go/bin
    go install github.com/awslabs/eks-node-viewer/cmd/eks-node-viewer@latest
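`go install` writes the binary to `$(go env GOPATH)/bin`, which is `~/go/bin` when neither `GOPATH` nor `GOBIN` is set. That is why the next step changes into `~/go/bin` before running `eks-node-viewer`. A minimal sketch, assuming that default layout, that puts the directory on `PATH` exactly once. The `add_to_path` helper is hypothetical and is not part of Go or eks-node-viewer:

```bash
#!/usr/bin/env bash
# Hypothetical helper: append a directory to PATH only if it is not already present.
add_to_path() {
  local dir="$1"
  case ":${PATH}:" in
    *":${dir}:"*) ;;                        # already on PATH: nothing to do
    *) PATH="${PATH:+${PATH}:}${dir}" ;;    # append, without a leading ':' if PATH was empty
  esac
  export PATH
}

# Assumption: default GOPATH (~/go), so `go install` put eks-node-viewer in ~/go/bin.
add_to_path "$HOME/go/bin"
```

After this, `eks-node-viewer --resources cpu,memory` can be started from any directory instead of `cd ~/go/bin` first.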

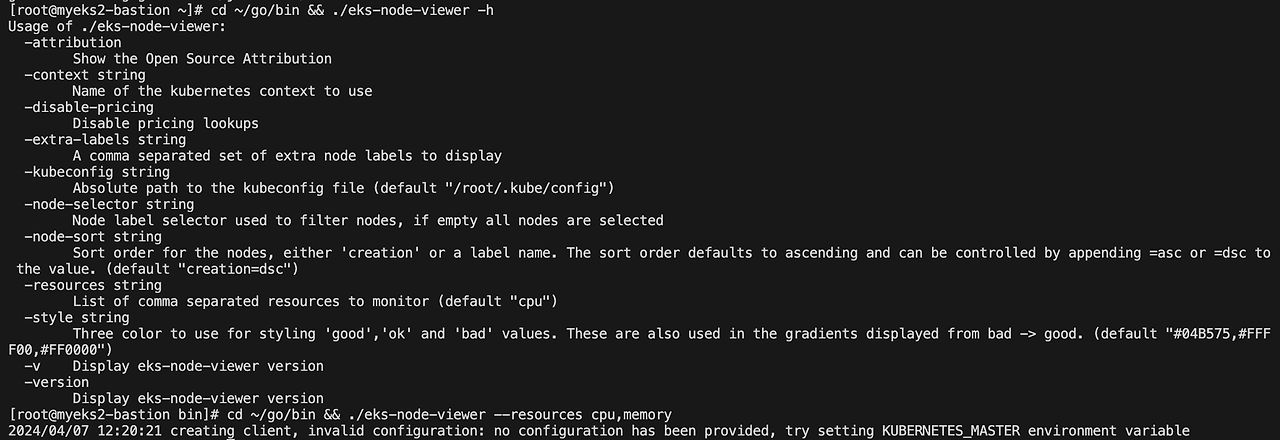

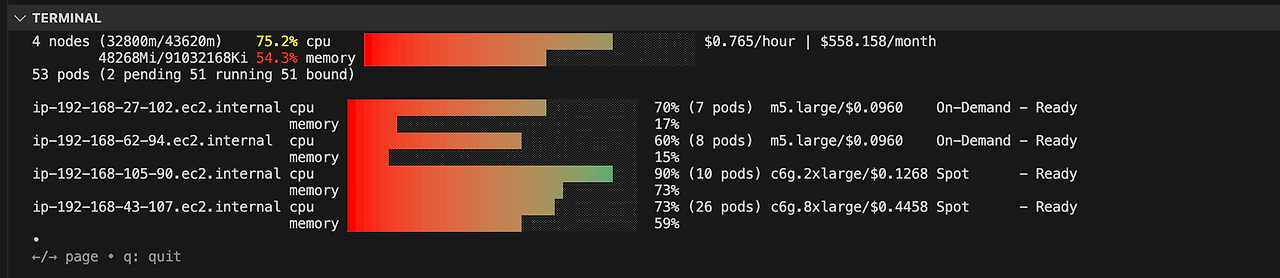

    # [Terminal 1] check the binary
    cd ~/go/bin && ./eks-node-viewer -h

    # Run this once the EKS deployment has finished
    cd ~/go/bin && ./eks-node-viewer --resources cpu,memory

3. Deploy EKS

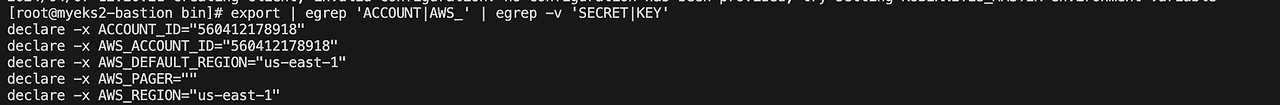

    # Check the preset AWS variables (secrets and keys excluded)
    export | grep -E 'ACCOUNT|AWS_' | grep -Ev 'SECRET|KEY'

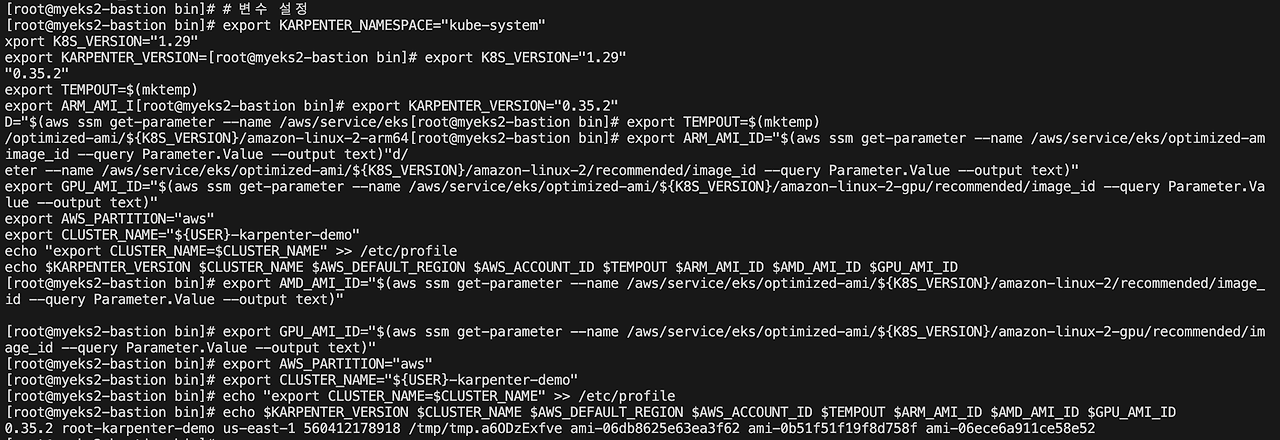

    # Set variables
    export KARPENTER_NAMESPACE="kube-system"
    export K8S_VERSION="1.29"
    export KARPENTER_VERSION="0.35.2"
    export TEMPOUT=$(mktemp)
    export ARM_AMI_ID="$(aws ssm get-parameter --name /aws/service/eks/optimized-ami/${K8S_VERSION}/amazon-linux-2-arm64/recommended/image_id --query Parameter.Value --output text)"
    export AMD_AMI_ID="$(aws ssm get-parameter --name /aws/service/eks/optimized-ami/${K8S_VERSION}/amazon-linux-2/recommended/image_id --query Parameter.Value --output text)"
    export GPU_AMI_ID="$(aws ssm get-parameter --name /aws/service/eks/optimized-ami/${K8S_VERSION}/amazon-linux-2-gpu/recommended/image_id --query Parameter.Value --output text)"
    export AWS_PARTITION="aws"
    export CLUSTER_NAME="${USER}-karpenter-demo"
    echo "export CLUSTER_NAME=$CLUSTER_NAME" >> /etc/profile
    echo $KARPENTER_VERSION $CLUSTER_NAME $AWS_DEFAULT_REGION $AWS_ACCOUNT_ID $TEMPOUT $ARM_AMI_ID $AMD_AMI_ID $GPU_AMI_ID
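If one of the `aws ssm get-parameter` lookups fails, the matching `*_AMI_ID` is left empty, and the problem goes unnoticed until that variable is used later. A small guard can make this fail fast. It is sketched here with a hypothetical `require_vars` function and assumes that `AWS_DEFAULT_REGION` and `AWS_ACCOUNT_ID` are already exported by the lab environment, as the `echo` above implies:

```bash
#!/usr/bin/env bash
# Hypothetical guard: report every named variable that is unset or empty.
# Returns 0 when all are set, 1 otherwise.
require_vars() {
  local name missing=0
  for name in "$@"; do
    # ${!name} is bash indirect expansion: the value of the variable whose name is in $name
    if [ -z "${!name:-}" ]; then
      echo "missing: ${name}" >&2
      missing=1
    fi
  done
  return "$missing"
}

require_vars KARPENTER_NAMESPACE K8S_VERSION KARPENTER_VERSION CLUSTER_NAME \
  AWS_DEFAULT_REGION AWS_ACCOUNT_ID ARM_AMI_ID AMD_AMI_ID GPU_AMI_ID \
  || echo "Fix the variables above before creating the cluster." >&2
```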

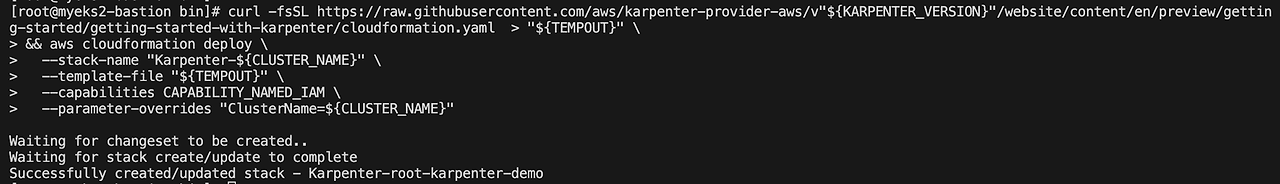

    # Create the IAM policy and role (KarpenterNodeRole-${CLUSTER_NAME}) with a CloudFormation stack: takes about 3 minutes
    curl -fsSL https://raw.githubusercontent.com/aws/karpenter-provider-aws/v"${KARPENTER_VERSION}"/website/content/en/preview/getting-started/getting-started-with-karpenter/cloudformation.yaml > "${TEMPOUT}" \
    && aws cloudformation deploy \
      --stack-name "Karpenter-${CLUSTER_NAME}" \
      --template-file "${TEMPOUT}" \
      --capabilities CAPABILITY_NAMED_IAM \
      --parameter-overrides "ClusterName=${CLUSTER_NAME}"
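`aws cloudformation deploy` waits for the stack operation to finish. When the lab is re-run, though, it helps to check the stack state before creating the cluster, because the eksctl step refers to the `KarpenterControllerPolicy-${CLUSTER_NAME}` policy and the `KarpenterNodeRole-${CLUSTER_NAME}` role that this stack creates. A sketch with two hypothetical helpers: `describe-stacks --query 'Stacks[0].StackStatus'` is a standard AWS CLI call, while the pass/fail rule in `stack_ready` is a simplification:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the status of the Karpenter CloudFormation stack.
# Needs AWS credentials and CLUSTER_NAME; only called explicitly, never on load.
karpenter_stack_status() {
  aws cloudformation describe-stacks \
    --stack-name "Karpenter-${CLUSTER_NAME}" \
    --query 'Stacks[0].StackStatus' --output text
}

# Hypothetical rule: only a finished create/update without rollback counts as ready.
stack_ready() {
  case "$1" in
    CREATE_COMPLETE|UPDATE_COMPLETE) return 0 ;;
    *) return 1 ;;   # *_IN_PROGRESS, *_FAILED, *ROLLBACK* and anything else
  esac
}
```

Usage: `stack_ready "$(karpenter_stack_status)" && echo "IAM resources ready"`.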

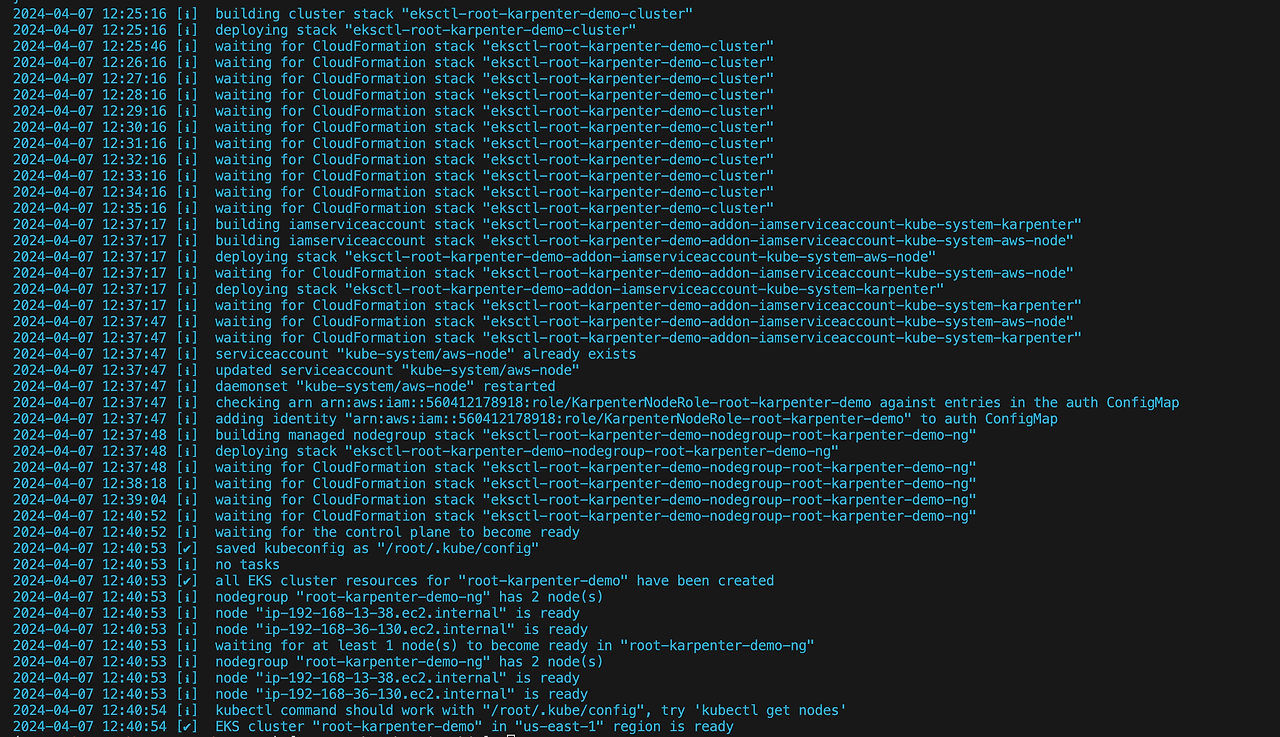

    # Create the cluster: creating the ${CLUSTER_NAME} EKS cluster takes about 19 minutes
    eksctl create cluster -f - <<EOF
    ---
    apiVersion: eksctl.io/v1alpha5
    kind: ClusterConfig
    metadata:
      name: ${CLUSTER_NAME}
      region: ${AWS_DEFAULT_REGION}
      version: "${K8S_VERSION}"
      tags:
        karpenter.sh/discovery: ${CLUSTER_NAME}

    iam:
      withOIDC: true
      serviceAccounts:
      - metadata:
          name: karpenter
          namespace: "${KARPENTER_NAMESPACE}"
        roleName: ${CLUSTER_NAME}-karpenter
        attachPolicyARNs:
        - arn:${AWS_PARTITION}:iam::${AWS_ACCOUNT_ID}:policy/KarpenterControllerPolicy-${CLUSTER_NAME}
        roleOnly: true

    iamIdentityMappings:
    - arn: "arn:${AWS_PARTITION}:iam::${AWS_ACCOUNT_ID}:role/KarpenterNodeRole-${CLUSTER_NAME}"
      username: system:node:{{EC2PrivateDNSName}}
      groups:
      - system:bootstrappers
      - system:nodes

    managedNodeGroups:
    - instanceType: m5.large
      amiFamily: AmazonLinux2
      name: ${CLUSTER_NAME}-ng
      desiredCapacity: 2
      minSize: 1
      maxSize: 10
      iam:
        withAddonPolicies:
          externalDNS: true
    EOF

    # Verify the EKS deployment
    eksctl get cluster
    eksctl get nodegroup --cluster $CLUSTER_NAME

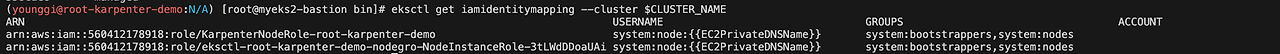

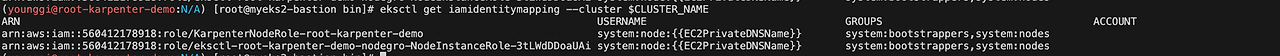

eksctl get iamidentitymapping --cluster $CLUSTER_NAME

eksctl get iamserviceaccount --cluster $CLUSTER_NAME

eksctl get addon --cluster $CLUSTER_NAME

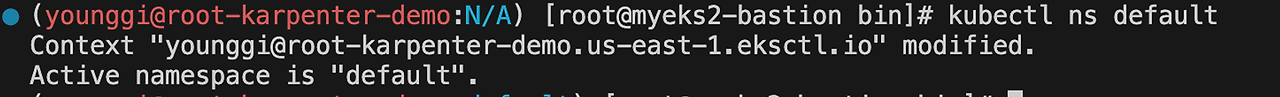

    # Switch to the default namespace (kubectl ns is the krew ns plugin)
    kubectl ns default

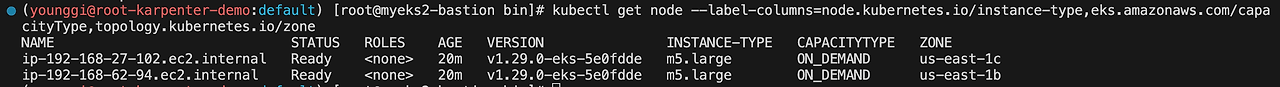

    # Check node information
    kubectl get node --label-columns=node.kubernetes.io/instance-type,eks.amazonaws.com/capacityType,topology.kubernetes.io/zone

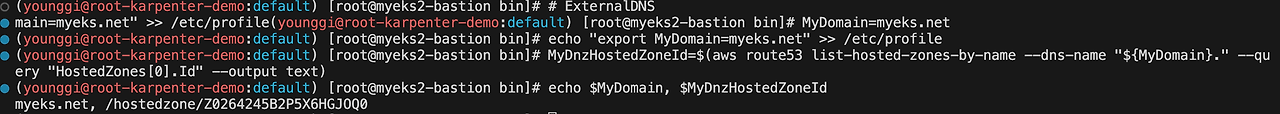

    # ExternalDNS: set the domain and look up its Route 53 hosted zone ID
    MyDomain=myeks.net
    echo "export MyDomain=myeks.net" >> /etc/profile
    MyDnzHostedZoneId=$(aws route53 list-hosted-zones-by-name --dns-name "${MyDomain}." --query "HostedZones[0].Id" --output text)
    echo $MyDomain, $MyDnzHostedZoneId
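Two details of this hosted-zone lookup are easy to miss. First, `list-hosted-zones-by-name` returns zones in order starting from `--dns-name`, so if `myeks.net.` is not a zone in the account, `HostedZones[0]` silently returns the next zone instead. Second, the returned `Id` carries a `/hostedzone/` prefix. A sketch that guards against both, using hypothetical helper names:

```bash
#!/usr/bin/env bash
# Hypothetical helper: strip the "/hostedzone/" prefix from a Route 53 zone Id.
zone_id_only() {
  printf '%s\n' "${1#/hostedzone/}"
}

# Hypothetical helper: true only if the zone name returned by Route 53
# (always fully qualified, with a trailing dot) is exactly the requested domain.
zone_matches() {
  local zone_name="$1" domain="$2"
  [ "$zone_name" = "${domain%.}." ]
}

# Hypothetical lookup: take the first zone at or after the domain and
# refuse to use it unless its name matches. Needs AWS credentials.
lookup_zone_id() {
  local domain="$1" name id
  read -r name id < <(aws route53 list-hosted-zones-by-name \
    --dns-name "${domain%.}." --max-items 1 \
    --query 'HostedZones[0].[Name,Id]' --output text)
  zone_matches "$name" "$domain" || { echo "no hosted zone named ${domain}" >&2; return 1; }
  zone_id_only "$id"
}
```

Usage: `MyDnzHostedZoneId=$(lookup_zone_id "$MyDomain")`.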

    # kube-ops-view
    helm repo add geek-cookbook https://geek-cookbook.github.io/charts/
    helm install kube-ops-view geek-cookbook/kube-ops-view --version 1.2.2 --set env.TZ="Asia/Seoul" --namespace kube-system
    kubectl patch svc -n kube-system kube-ops-view -p '{"spec":{"type":"LoadBalancer"}}'
    kubectl annotate service kube-ops-view -n kube-system "external-dns.alpha.kubernetes.io/hostname=kubeopsview.$MyDomain"
    echo -e "Kube Ops View URL = http://kubeopsview.$MyDomain:8080/#scale=1.5"

4. Install Grafana and Prometheus

    # Install Grafana
    # (skip the next two commands if the grafana-charts repo and the monitoring namespace already exist)
    helm repo add grafana-charts https://grafana.github.io/helm-charts
    kubectl create namespace monitoring
    curl -fsSL https://raw.githubusercontent.com/aws/karpenter-provider-aws/v"${KARPENTER_VERSION}"/website/content/en/preview/getting-started/getting-started-with-karpenter/grafana-values.yaml | tee grafana-values.yaml
    helm install --namespace monitoring grafana grafana-charts/grafana --values grafana-values.yaml
    kubectl patch svc -n monitoring grafana -p '{"spec":{"type":"LoadBalancer"}}'

    # admin password
    kubectl get secret --namespace monitoring grafana -o jsonpath="{.data.admin-password}" | base64 --decode ; echo

    # Access Grafana
    kubectl annotate service grafana -n monitoring "external-dns.alpha.kubernetes.io/hostname=grafana.$MyDomain"
    echo -e "grafana URL = http://grafana.$MyDomain"

5. Create NodePool

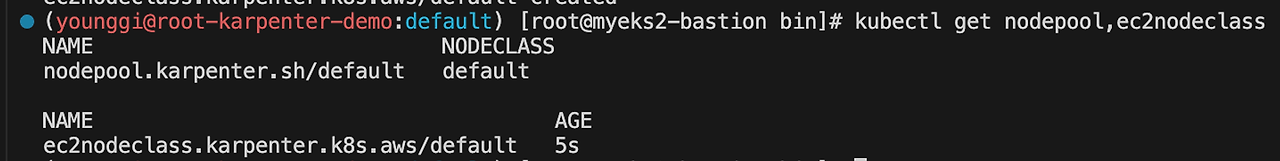

    cat <<EOF | envsubst | kubectl apply -f -
    apiVersion: karpenter.sh/v1beta1
    kind: NodePool
    metadata:
      name: default
    spec:
      template:
        spec:
          requirements:
            - key: kubernetes.io/arch
              operator: In
              values: ["amd64"]
            - key: kubernetes.io/os
              operator: In
              values: ["linux"]
            - key: karpenter.sh/capacity-type
              operator: In
              values: ["spot"]
            - key: karpenter.k8s.aws/instance-category
              operator: In
              values: ["c", "m", "r"]
            - key: karpenter.k8s.aws/instance-generation
              operator: Gt
              values: ["2"]
          nodeClassRef:
            apiVersion: karpenter.k8s.aws/v1beta1
            kind: EC2NodeClass
            name: default
      limits:
        cpu: 1000
      disruption:
        consolidationPolicy: WhenUnderutilized
        expireAfter: 720h # 30 * 24h = 720h
    ---
    apiVersion: karpenter.k8s.aws/v1beta1
    kind: EC2NodeClass
    metadata:
      name: default
    spec:
      amiFamily: AL2 # Amazon Linux 2
      role: "KarpenterNodeRole-${CLUSTER_NAME}" # replace with your cluster name
      subnetSelectorTerms:
        - tags:
            karpenter.sh/discovery: "${CLUSTER_NAME}" # replace with your cluster name
      securityGroupSelectorTerms:
        - tags:
            karpenter.sh/discovery: "${CLUSTER_NAME}" # replace with your cluster name
      amiSelectorTerms:
        - id: "${ARM_AMI_ID}"
        - id: "${AMD_AMI_ID}"
    #   - id: "${GPU_AMI_ID}" # <- GPU Optimized AMD AMI
    #   - name: "amazon-eks-node-${K8S_VERSION}-*" # <- automatically upgrade when a new AL2 EKS Optimized AMI is released. This is unsafe for production workloads. Validate AMIs in lower environments before deploying them to production.
    EOF

    # Verify
    kubectl get nodepool,ec2nodeclass
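The `requirements` block narrows which instance types Karpenter may launch. As a rough illustration of the `instance-category In [c, m, r]` and `instance-generation Gt 2` rules, the hypothetical function below derives both attributes from the instance-type name. This is only an approximation. Karpenter takes these labels from EC2 instance-type data rather than parsing names, and the remaining requirements (amd64, linux, spot) are not modeled here:

```bash
#!/usr/bin/env bash
# Hypothetical, name-based approximation of two NodePool requirements:
#   karpenter.k8s.aws/instance-category   In [c, m, r]
#   karpenter.k8s.aws/instance-generation Gt 2
matches_default_nodepool() {
  local instance_type="$1"
  local family="${instance_type%%.*}"    # c6i.2xlarge -> c6i
  local category="${family%%[0-9]*}"     # c6i -> c
  local rest="${family#"$category"}"     # c6i -> 6i
  local generation="${rest%%[!0-9]*}"    # 6i -> 6
  [ -n "$category" ] && [ -n "$generation" ] || return 1
  case "$category" in
    c|m|r) ;;
    *) return 1 ;;
  esac
  [ "$generation" -gt 2 ]
}

for t in c6i.2xlarge m5.large c1.medium t3.micro; do
  if matches_default_nodepool "$t"; then
    echo "allowed:  $t"
  else
    echo "filtered: $t"
  fi
done
```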

6. Scale up deployment

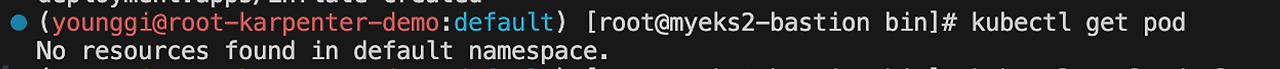

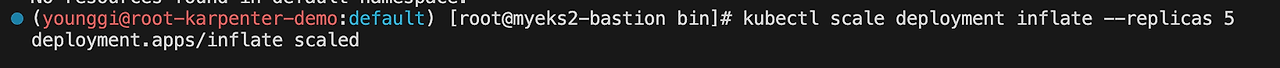

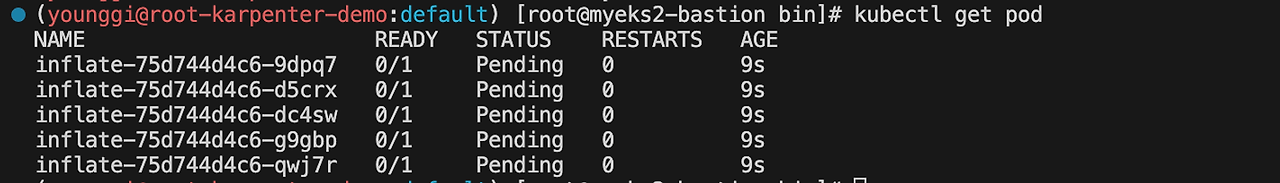

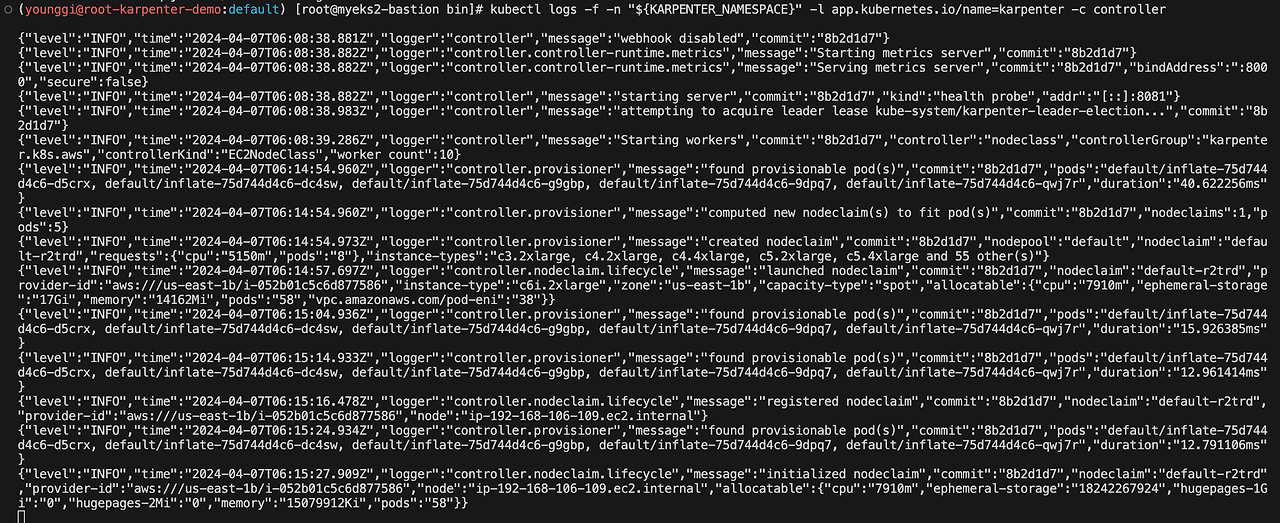

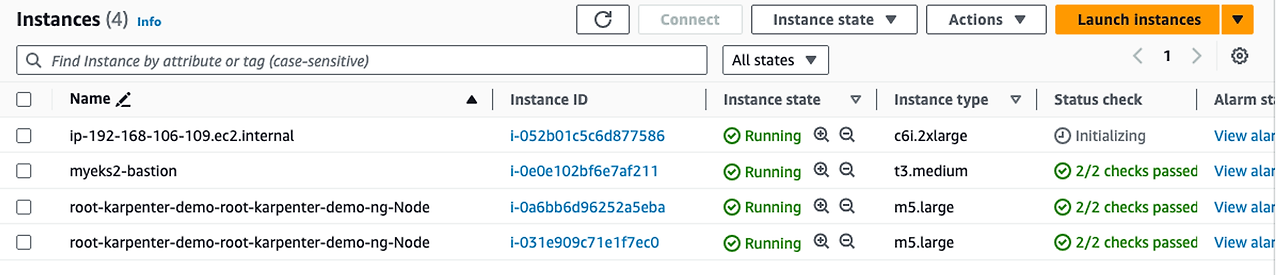

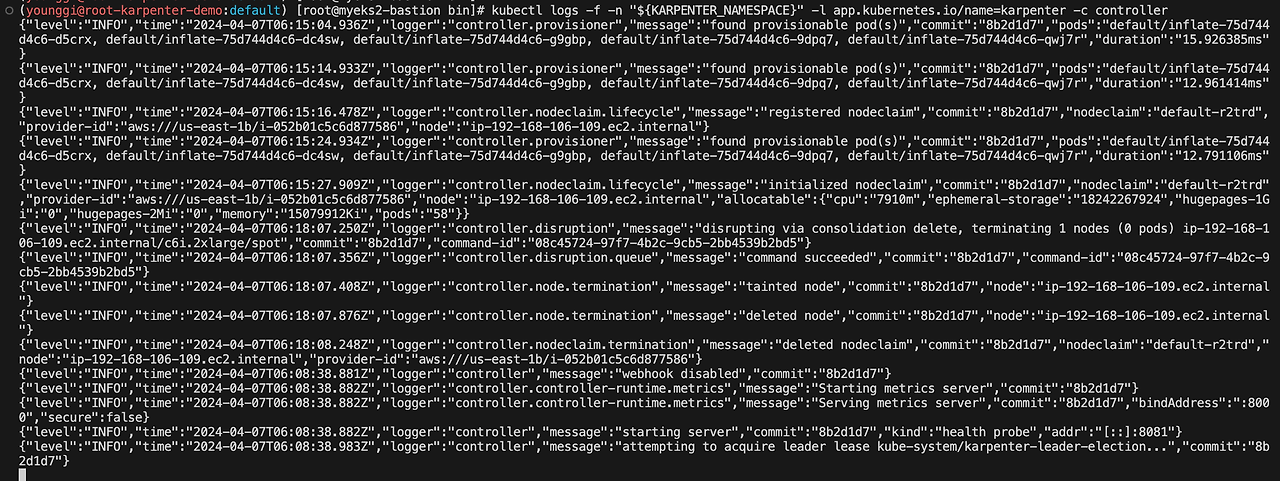

    # Each pause pod is guaranteed (requests) a minimum of 1 CPU
    cat <<EOF | kubectl apply -f -
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: inflate
    spec:
      replicas: 0
      selector:
        matchLabels:
          app: inflate
      template:
        metadata:
          labels:
            app: inflate
        spec:
          terminationGracePeriodSeconds: 0
          containers:
            - name: inflate
              image: public.ecr.aws/eks-distro/kubernetes/pause:3.7
              resources:
                requests:
                  cpu: 1
    EOF

    # Scale up
    kubectl get pod
    kubectl scale deployment inflate --replicas 5
    kubectl logs -f -n "${KARPENTER_NAMESPACE}" -l app.kubernetes.io/name=karpenter -c controller
    kubectl logs -f -n "${KARPENTER_NAMESPACE}" -l app.kubernetes.io/name=karpenter -c controller | jq '.'

kubectl get pod

kubectl scale deployment inflate --replicas 5

kubectl get pod

kubectl logs -f -n "${KARPENTER_NAMESPACE}" -l app.kubernetes.io/name=karpenter -c controller

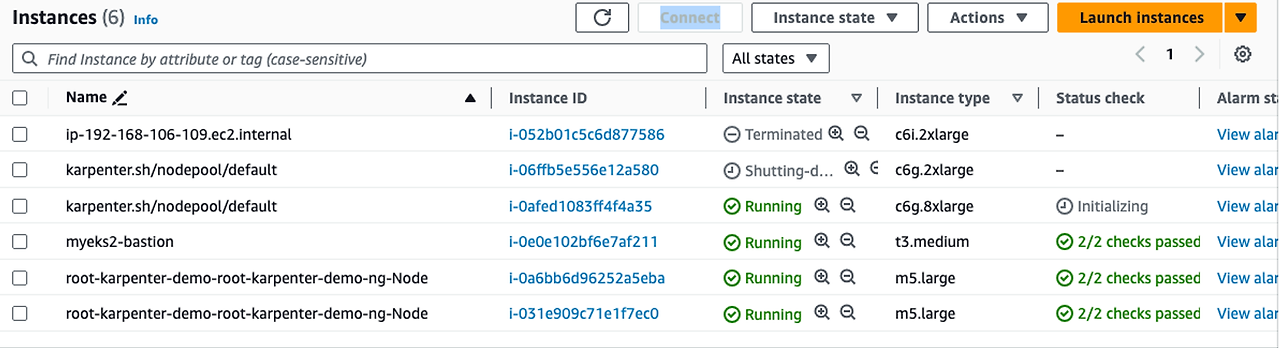

kubectl logs -f -n "${KARPENTER_NAMESPACE}" -l app.kubernetes.io/name=karpenter -c controller | jq '.'

    (younggi@root-karpenter-demo:default) [root@myeks2-bastion bin]# kubectl logs -f -n "${KARPENTER_NAMESPACE}" -l app.kubernetes.io/name=karpenter -c controller | jq '.'
    { "level": "INFO", "time": "2024-04-07T06:08:39.286Z", "logger": "controller", "message": "Starting workers", "commit": "8b2d1d7", "controller": "nodeclass", "controllerGroup": "karpenter.k8s.aws", "controllerKind": "EC2NodeClass", "worker count": 10 }
    { "level": "INFO", "time": "2024-04-07T06:14:54.960Z", "logger": "controller.provisioner", "message": "found provisionable pod(s)", "commit": "8b2d1d7", "pods": "default/inflate-75d744d4c6-d5crx, default/inflate-75d744d4c6-dc4sw, default/inflate-75d744d4c6-g9gbp, default/inflate-75d744d4c6-9dpq7, default/inflate-75d744d4c6-qwj7r", "duration": "40.622256ms" }
    { "level": "INFO", "time": "2024-04-07T06:14:54.960Z", "logger": "controller.provisioner", "message": "computed new nodeclaim(s) to fit pod(s)", "commit": "8b2d1d7", "nodeclaims": 1, "pods": 5 }
    { "level": "INFO", "time": "2024-04-07T06:14:54.973Z", "logger": "controller.provisioner", "message": "created nodeclaim", "commit": "8b2d1d7", "nodepool": "default", "nodeclaim": "default-r2trd", "requests": { "cpu": "5150m", "pods": "8" }, "instance-types": "c3.2xlarge, c4.2xlarge, c4.4xlarge, c5.2xlarge, c5.4xlarge and 55 other(s)" }
    { "level": "INFO", "time": "2024-04-07T06:14:57.697Z", "logger": "controller.nodeclaim.lifecycle", "message": "launched nodeclaim", "commit": "8b2d1d7", "nodeclaim": "default-r2trd", "provider-id": "aws:///us-east-1b/i-052b01c5c6d877586", "instance-type": "c6i.2xlarge", "zone": "us-east-1b", "capacity-type": "spot", "allocatable": { "cpu": "7910m", "ephemeral-storage": "17Gi", "memory": "14162Mi", "pods": "58", "vpc.amazonaws.com/pod-eni": "38" } }
    { "level": "INFO", "time": "2024-04-07T06:15:04.936Z", "logger": "controller.provisioner", "message": "found provisionable pod(s)", "commit": "8b2d1d7", "pods": "default/inflate-75d744d4c6-d5crx, default/inflate-75d744d4c6-dc4sw, default/inflate-75d744d4c6-g9gbp, default/inflate-75d744d4c6-9dpq7, default/inflate-75d744d4c6-qwj7r", "duration": "15.926385ms" }
    { "level": "INFO", "time": "2024-04-07T06:15:14.933Z", "logger": "controller.provisioner", "message": "found provisionable pod(s)", "commit": "8b2d1d7", "pods": "default/inflate-75d744d4c6-d5crx, default/inflate-75d744d4c6-dc4sw, default/inflate-75d744d4c6-g9gbp, default/inflate-75d744d4c6-9dpq7, default/inflate-75d744d4c6-qwj7r", "duration": "12.961414ms" }
    { "level": "INFO", "time": "2024-04-07T06:15:16.478Z", "logger": "controller.nodeclaim.lifecycle", "message": "registered nodeclaim", "commit": "8b2d1d7", "nodeclaim": "default-r2trd", "provider-id": "aws:///us-east-1b/i-052b01c5c6d877586", "node": "ip-192-168-106-109.ec2.internal" }
    { "level": "INFO", "time": "2024-04-07T06:15:24.934Z", "logger": "controller.provisioner", "message": "found provisionable pod(s)", "commit": "8b2d1d7", "pods": "default/inflate-75d744d4c6-d5crx, default/inflate-75d744d4c6-dc4sw, default/inflate-75d744d4c6-g9gbp, default/inflate-75d744d4c6-9dpq7, default/inflate-75d744d4c6-qwj7r", "duration": "12.791106ms" }
    { "level": "INFO", "time": "2024-04-07T06:15:27.909Z", "logger": "controller.nodeclaim.lifecycle", "message": "initialized nodeclaim", "commit": "8b2d1d7", "nodeclaim": "default-r2trd", "provider-id": "aws:///us-east-1b/i-052b01c5c6d877586", "node": "ip-192-168-106-109.ec2.internal", "allocatable": { "cpu": "7910m", "ephemeral-storage": "18242267924", "hugepages-1Gi": "0", "hugepages-2Mi": "0", "memory": "15079912Ki", "pods": "58" } }
    { "level": "INFO", "time": "2024-04-07T06:08:38.881Z", "logger": "controller", "message": "webhook disabled", "commit": "8b2d1d7" }
    { "level": "INFO", "time": "2024-04-07T06:08:38.882Z", "logger": "controller.controller-runtime.metrics", "message": "Starting metrics server", "commit": "8b2d1d7" }
    { "level": "INFO", "time": "2024-04-07T06:08:38.882Z", "logger": "controller.controller-runtime.metrics", "message": "Serving metrics server", "commit": "8b2d1d7", "bindAddress": ":8000", "secure": false }
    { "level": "INFO", "time": "2024-04-07T06:08:38.882Z", "logger": "controller", "message": "starting server", "commit": "8b2d1d7", "kind": "health probe", "addr": "[::]:8081" }
    { "level": "INFO", "time": "2024-04-07T06:08:38.983Z", "logger": "controller", "message": "attempting to acquire leader lease kube-system/karpenter-leader-election...", "commit": "8b2d1d7" }

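The numbers in this log add up. Five `inflate` replicas request 1 CPU each, and the NodeClaim asked for `5150m` CPU and `8` pods. The remaining 150m CPU and 3 pods are presumably the per-node DaemonSet pods that Karpenter also accounts for. The `c6i.2xlarge` it launched reports `7910m` allocatable, which leaves room for two more 1-CPU pods. A quick shell check of that arithmetic, using only values copied from the log:

```bash
#!/usr/bin/env bash
# Values copied from the controller log above.
replicas=5
per_pod_mcpu=1000        # requests.cpu: 1 in the inflate Deployment
nodeclaim_mcpu=5150      # "requests": {"cpu": "5150m"} on nodeclaim default-r2trd
nodeclaim_pods=8         # "requests": {"pods": "8"}
allocatable_mcpu=7910    # "allocatable": {"cpu": "7910m"} on the c6i.2xlarge

overhead_mcpu=$(( nodeclaim_mcpu - replicas * per_pod_mcpu ))  # requests not from inflate
overhead_pods=$(( nodeclaim_pods - replicas ))
headroom_mcpu=$(( allocatable_mcpu - nodeclaim_mcpu ))
extra_pods=$(( headroom_mcpu / per_pod_mcpu ))                 # integer division

echo "overhead: ${overhead_mcpu}m CPU across ${overhead_pods} pods"
echo "headroom: ${headroom_mcpu}m CPU, room for ${extra_pods} more inflate pods"
```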
7. Scale down deployment

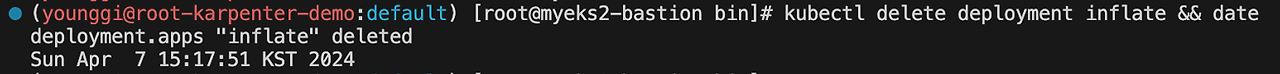

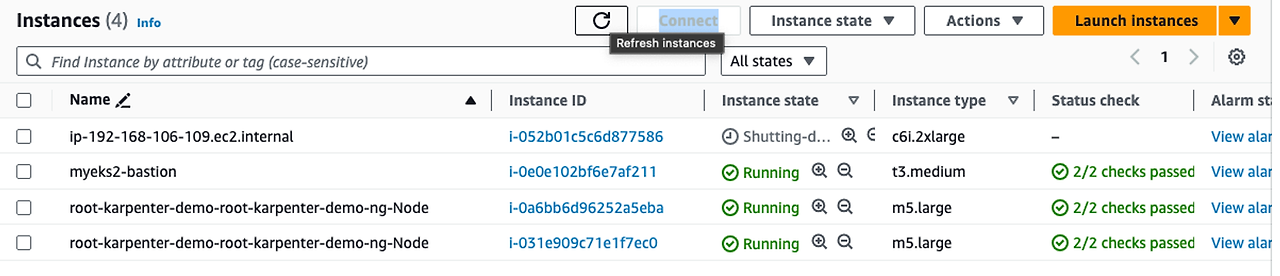

    # Now, delete the deployment. After a short amount of time, Karpenter should terminate the empty nodes due to consolidation.
    kubectl delete deployment inflate && date
    kubectl logs -f -n "${KARPENTER_NAMESPACE}" -l app.kubernetes.io/name=karpenter -c controller
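Instead of watching the log until the empty nodes disappear, the wait can be scripted. Here is a minimal polling sketch. `wait_until` and `no_nodeclaims` are hypothetical helpers, and the 600 s timeout and 10 s interval in the usage line are arbitrary choices. Only Karpenter-launched nodes have NodeClaims, so the two managed node group nodes are not counted:

```bash
#!/usr/bin/env bash
# Hypothetical helper: re-run a command every INTERVAL seconds until it succeeds
# or TIMEOUT seconds have passed. Usage: wait_until TIMEOUT INTERVAL cmd [args...]
# Returns 0 on success, 1 on timeout. INTERVAL should be at least 1.
wait_until() {
  local timeout="$1" interval="$2"
  shift 2
  local waited=0
  until "$@"; do
    if [ "$waited" -ge "$timeout" ]; then
      return 1
    fi
    sleep "$interval"
    waited=$(( waited + interval ))
  done
  return 0
}

# Succeeds when the cluster has no NodeClaims left; a kubectl error counts as "not yet".
no_nodeclaims() {
  local out
  out=$(kubectl get nodeclaims -o name) || return 1
  [ -z "$out" ]
}
```

Usage: `wait_until 600 10 no_nodeclaims && date`, run right after `kubectl delete deployment inflate && date`, shows how long consolidation took.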

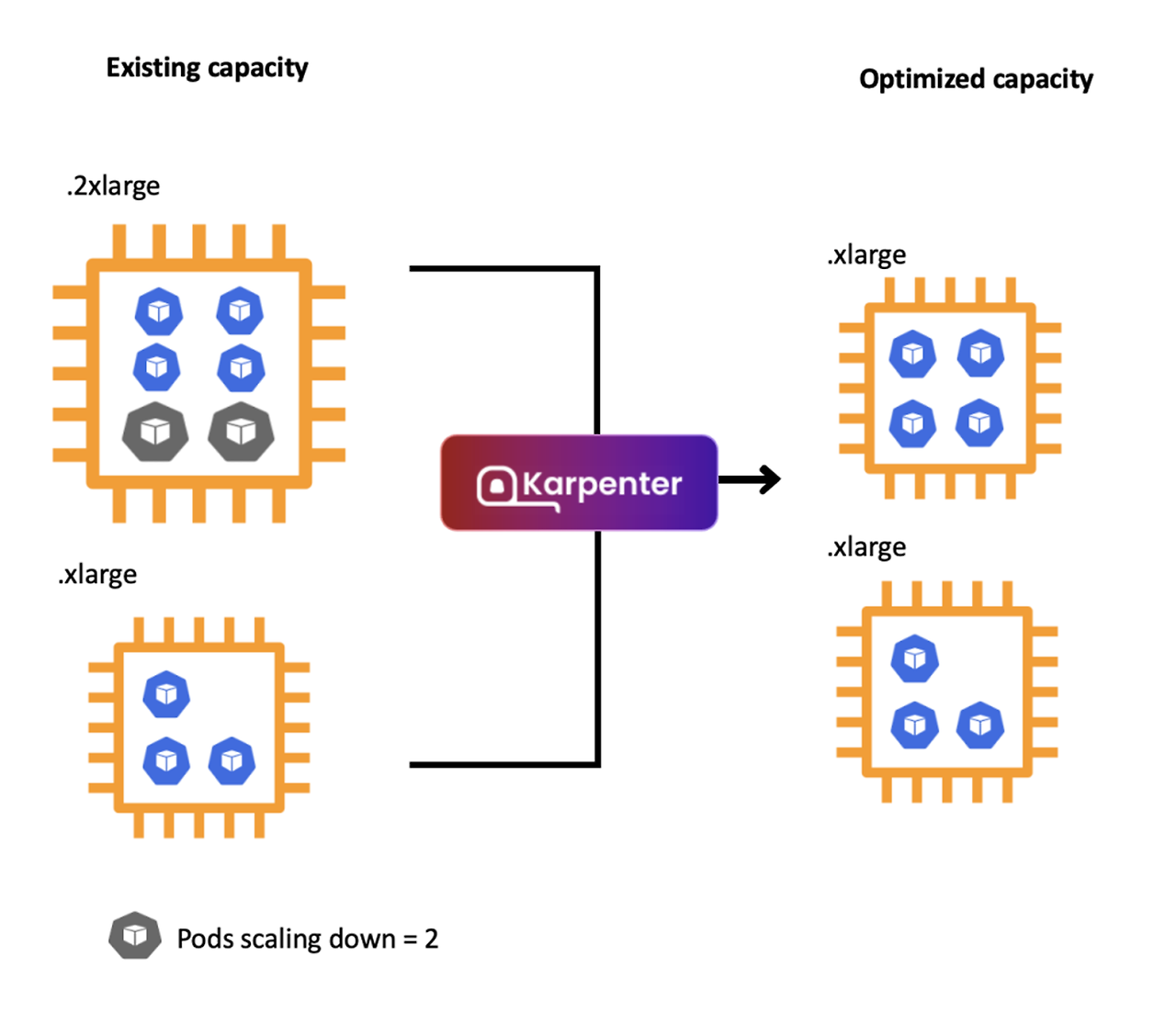

8. Disruption (formerly Consolidation)

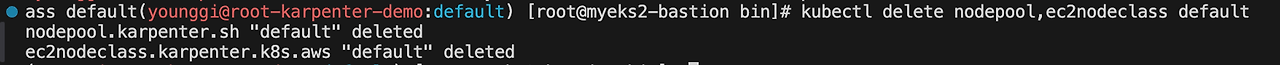

    # Delete the existing NodePool and EC2NodeClass
    kubectl delete nodepool,ec2nodeclass default

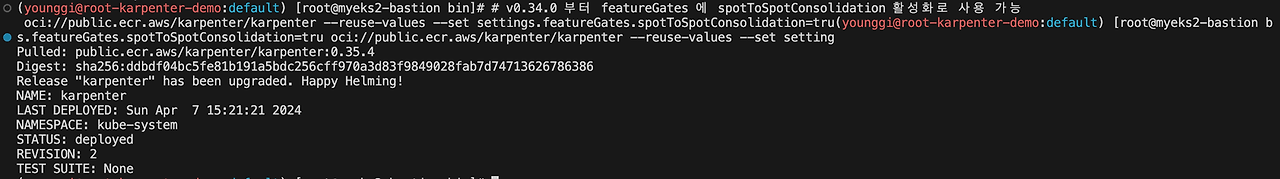

    # Since v0.34.0, spot-to-spot consolidation is available once the spotToSpotConsolidation feature gate is enabled
    helm upgrade karpenter -n kube-system oci://public.ecr.aws/karpenter/karpenter --version "${KARPENTER_VERSION}" --reuse-values --set settings.featureGates.spotToSpotConsolidation=true
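Because `--set` stores whatever value is typed, it is worth confirming that the release now carries `spotToSpotConsolidation: true`. `helm get values` prints the user-supplied values of a release. The hypothetical `spot_to_spot_enabled` filter below is a rough, grep-based check of that output, not a YAML parser:

```bash
#!/usr/bin/env bash
# Hypothetical filter: read `helm get values` output on stdin and succeed only if
# spotToSpotConsolidation is set to exactly true (line match, not a YAML parser).
spot_to_spot_enabled() {
  grep -Eq '^[[:space:]]*spotToSpotConsolidation:[[:space:]]*true[[:space:]]*$'
}

# Usage against the live release (requires cluster access):
#   helm get values karpenter -n kube-system | spot_to_spot_enabled && echo "spot-to-spot enabled"
```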

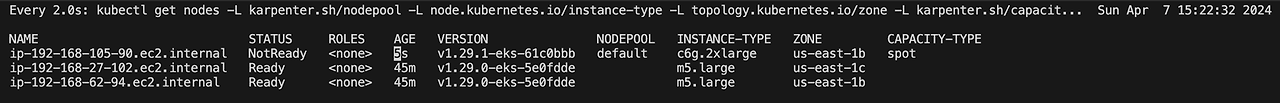

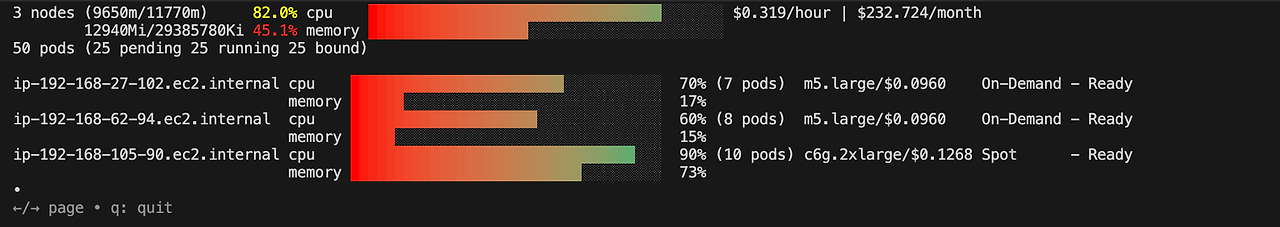

    # Create a Karpenter NodePool and EC2NodeClass
    cat <<EOF > nodepool.yaml
    apiVersion: karpenter.sh/v1beta1
    kind: NodePool
    metadata:
      name: default
    spec:
      template:
        metadata:
          labels:
            intent: apps
        spec:
          nodeClassRef:
            name: default
          requirements:
            - key: karpenter.sh/capacity-type
              operator: In
              values: ["spot"]
            - key: karpenter.k8s.aws/instance-category
              operator: In
              values: ["c","m","r"]
            - key: karpenter.k8s.aws/instance-size
              operator: NotIn
              values: ["nano","micro","small","medium"]
            - key: karpenter.k8s.aws/instance-hypervisor
              operator: In
              values: ["nitro"]
      limits:
        cpu: 100
        memory: 100Gi
      disruption:
        consolidationPolicy: WhenUnderutilized
    ---
    apiVersion: karpenter.k8s.aws/v1beta1
    kind: EC2NodeClass
    metadata:
      name: default
    spec:
      amiFamily: Bottlerocket
      subnetSelectorTerms:
        - tags:
            karpenter.sh/discovery: "${CLUSTER_NAME}" # replace with your cluster name
      securityGroupSelectorTerms:
        - tags:
            karpenter.sh/discovery: "${CLUSTER_NAME}" # replace with your cluster name
      role: "KarpenterNodeRole-${CLUSTER_NAME}" # replace with your cluster name
      tags:
        Name: karpenter.sh/nodepool/default
        IntentLabel: "apps"
    EOF
    kubectl apply -f nodepool.yaml

    watch -d "kubectl get nodes -L karpenter.sh/nodepool -L node.kubernetes.io/instance-type -L topology.kubernetes.io/zone -L karpenter.sh/capacity-type"

    kubectl get nodes -L karpenter.sh/nodepool -L node.kubernetes.io/instance-type -L topology.kubernetes.io/zone -L karpenter.sh/capacity-type
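The `limits` block caps the total resources this NodePool may provision (here `cpu: 100` and `memory: 100Gi`). The hypothetical check below uses a simplified model of that cap: a candidate node is allowed only while the CPU already provisioned by the NodePool plus the new node's vCPUs stays within the limit. For example, 96 vCPU of existing nodes leaves room for a 4-vCPU node but not for an 8-vCPU `c6g.2xlarge`. Karpenter's real check also covers memory and other resources:

```bash
#!/usr/bin/env bash
# Hypothetical, simplified model of NodePool CPU limits.
# Args: limit (vCPU), vCPU already provisioned by the NodePool, vCPU of the candidate node.
fits_cpu_limit() {
  local limit="$1" provisioned="$2" candidate="$3"
  [ $(( provisioned + candidate )) -le "$limit" ]
}

for candidate in 4 8 32; do
  if fits_cpu_limit 100 96 "$candidate"; then
    echo "${candidate} vCPU node: within the limit"
  else
    echo "${candidate} vCPU node: would exceed the limit"
  fi
done
```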

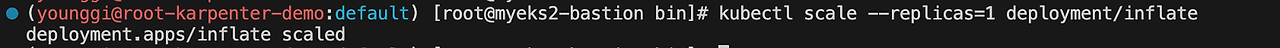

    # Scale in a sample workload to observe consolidation.
    # To trigger a Karpenter consolidation event, scale the inflate deployment down to 1 replica:
    kubectl scale --replicas=1 deployment/inflate

    # Use kubectl get nodeclaims to list all objects of type NodeClaim, then inspect a NodeClaim
    # resource with kubectl get nodeclaim/<claim-name> -o yaml.
    # In the NodeClaim .spec.requirements you can also see the candidate instance types passed to the
    # Amazon EC2 Fleet API (60 in this run):
    kubectl get nodeclaims
    kubectl get nodeclaims -o yaml | kubectl neat | yh

    (younggi@root-karpenter-demo:default) [root@myeks2-bastion bin]# kubectl get nodeclaims -o yaml | kubectl neat | yh
    apiVersion: v1
    items:
    - apiVersion: karpenter.sh/v1beta1
      kind: NodeClaim
      metadata:
        annotations:
          karpenter.k8s.aws/ec2nodeclass-hash: "1498318715957349211"
          karpenter.k8s.aws/tagged: "true"
          karpenter.sh/managed-by: root-karpenter-demo
          karpenter.sh/nodepool-hash: "14513086509224576023"
        labels:
          intent: apps
          karpenter.k8s.aws/instance-category: c
          karpenter.k8s.aws/instance-cpu: "8"
          karpenter.k8s.aws/instance-encryption-in-transit-supported: "false"
          karpenter.k8s.aws/instance-family: c6g
          karpenter.k8s.aws/instance-generation: "6"
          karpenter.k8s.aws/instance-hypervisor: nitro
          karpenter.k8s.aws/instance-memory: "16384"
          karpenter.k8s.aws/instance-network-bandwidth: "2500"
          karpenter.k8s.aws/instance-size: 2xlarge
          karpenter.sh/capacity-type: spot
          karpenter.sh/nodepool: default
          kubernetes.io/arch: arm64
          kubernetes.io/os: linux
          node.kubernetes.io/instance-type: c6g.2xlarge
          topology.kubernetes.io/region: us-east-1
          topology.kubernetes.io/zone: us-east-1b
        name: default-ccccx
      spec:
        nodeClassRef:
          name: default
        requirements:
        - key: karpenter.sh/capacity-type
          operator: In
          values:
          - spot
        - key: node.kubernetes.io/instance-type
          operator: In
          values:
          - c5.2xlarge
          - c5.4xlarge
          - c5a.2xlarge
          - c5a.4xlarge
          - c5ad.2xlarge
          - c5d.2xlarge
          - c5n.2xlarge
          - c6a.2xlarge
          - c6a.4xlarge
          - c6g.2xlarge
          - c6g.4xlarge
          - c6gd.2xlarge
          - c6gd.4xlarge
          - c6gn.2xlarge
          - c6gn.4xlarge
          - c6i.2xlarge
          - c6i.4xlarge
          - c6id.2xlarge
          - c6in.2xlarge
          - c7a.2xlarge
          - c7g.2xlarge
          - c7g.4xlarge
          - c7gd.2xlarge
          - c7gd.4xlarge
          - c7gn.2xlarge
          - c7i.2xlarge
          - c7i.4xlarge
          - m5.2xlarge
          - m5a.2xlarge
          - m5a.4xlarge
          - m5d.2xlarge
          - m5dn.2xlarge
          - m5n.2xlarge
          - m6a.2xlarge
          - m6g.2xlarge
          - m6g.4xlarge
          - m6gd.2xlarge
          - m6i.2xlarge
          - m6id.2xlarge
          - m6idn.2xlarge
          - m6in.2xlarge
          - m7a.2xlarge
          - m7g.2xlarge
          - m7g.4xlarge
          - m7gd.2xlarge
          - m7i-flex.2xlarge
          - m7i.2xlarge
          - r5.2xlarge
          - r5a.2xlarge
          - r5ad.2xlarge
          - r5d.2xlarge
          - r6a.2xlarge
          - r6g.2xlarge
          - r6gd.2xlarge
          - r6i.2xlarge
          - r6id.2xlarge
          - r7a.2xlarge
          - r7g.2xlarge
          - r7gd.2xlarge
          - r7i.2xlarge
        - key: karpenter.k8s.aws/instance-category
          operator: In
          values:
          - c
          - m
          - r
        - key: karpenter.k8s.aws/instance-size
          operator: NotIn
          values:
          - medium
          - micro
          - nano
          - small
        - key: karpenter.k8s.aws/instance-hypervisor
          operator: In
          values:
          - nitro
        - key: intent
          operator: In
          values:
          - apps
        - key: karpenter.sh/nodepool
          operator: In
          values:
          - default
        resources:
          requests:
            cpu: 5150m
            memory: 7680Mi
            pods: "8"
    kind: List
    metadata:
      resourceVersion: ""

- Increased by 50

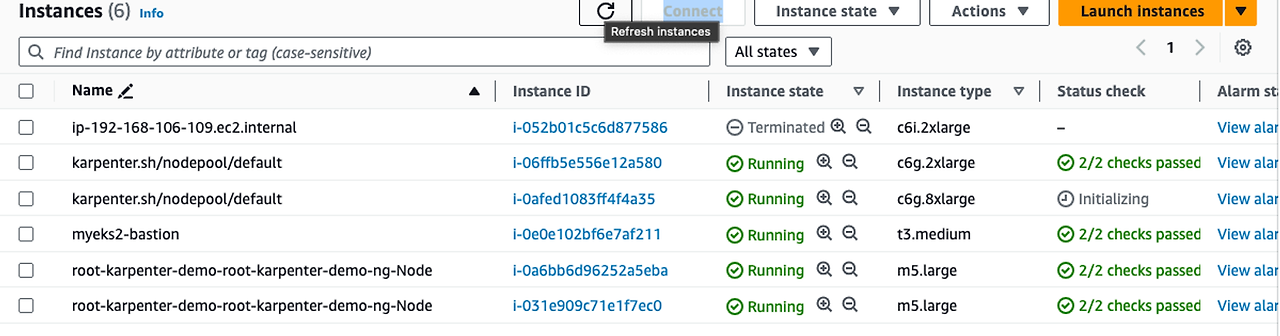

c6g.8xlarge launched > c6g.2xlarge terminated > pods moved to the new node
